Abstract

Virtually any digital IC is available in a low-power 3V version today. Analog circuits have traditionally used higher voltage supplies and sometimes dual supplies to achieve higher performance. Generally, it is easier and cheaper to design systems with a single-supply 5V or 3V rail. Lower power operation in low-voltage devices also makes them ideal for battery powered portable instrumentation.

If you design cellular telephones, notebook computers, or other high-volume portable products, you know that the semiconductor industry has been working overtime to help you migrate your designs to low voltage and low power. These days, virtually any digital IC function you could want is available in a low-power 3V version.

This is becoming equally true in the analog world. The ICs and techniques developed for high-volume applications can now leverage the design of medium-volume industrial and medical instruments too. The same low-voltage A/D converter initially developed to read pen position in a PDA can also be used to measure glucose levels in a hand-held medical product, for example.

The Challenge

The challenge is designing the circuitry between a sensor and a system's A/D converter. The problem of obtaining a stable representation of a real-world signal from a sensor is complicated significantly by the constraints of low power and reduced voltage spans. Consider that the standard for precision data acquisition is 12-bit linearity (that's one part in 4,096). That means the least-significant bit in a 2.5V span (3V system) is only 0.6mV.

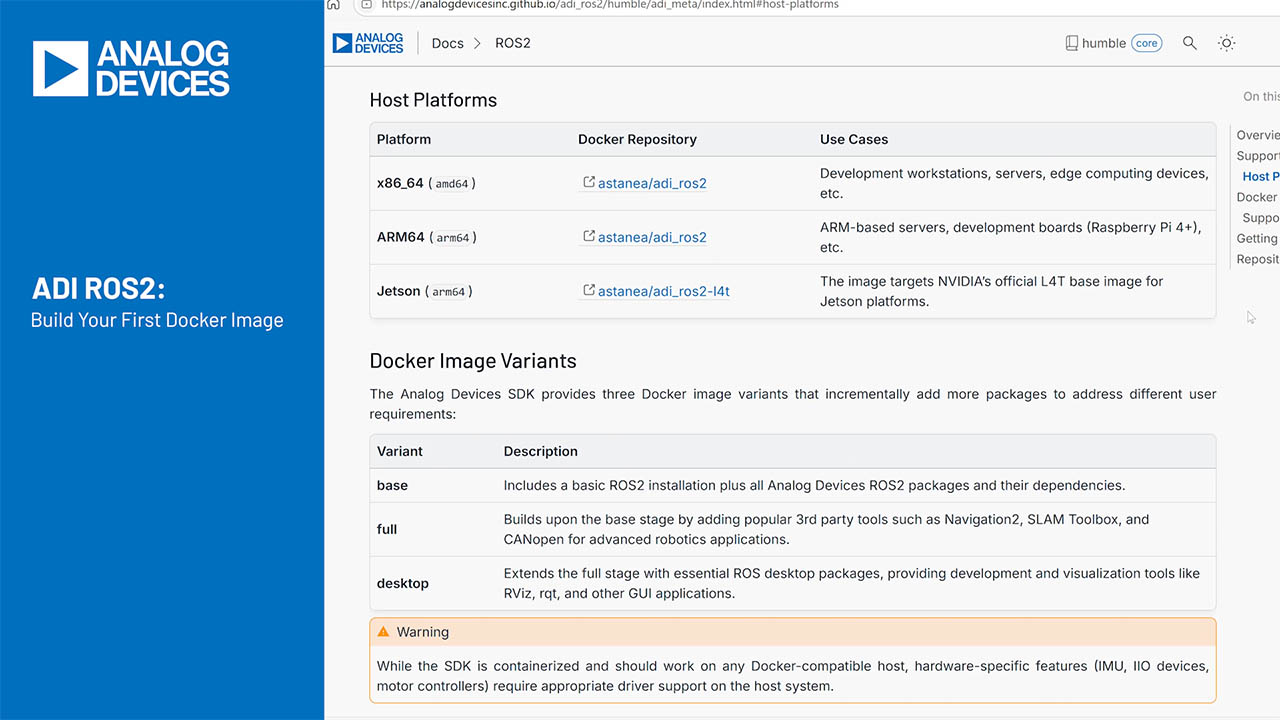

Figure 1 compares system supplies with voltage spans. In this diagram, you can see that the relative precision needed to acquire a signal in a single 3V system is eight times that required in a plus or minus 15V system. The luxury of those wide spans is improved by outstanding op amps, such as the popular OP-07, with a voltage offset of less than 100µV.

Figure 1. The shrinking LSB.
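The LSB arithmetic above is easy to check for yourself. The following is a minimal sketch: the function name `lsb_volts` is made up for illustration, and the 20V span used for the plus or minus 15V system is an assumption, chosen because it reproduces the factor of eight quoted above.

```python
def lsb_volts(span_volts: float, bits: int = 12) -> float:
    """Return the size of one least-significant bit for a given input span."""
    return span_volts / (2 ** bits)


# 2.5V span in a 3V system with a 12-bit converter (values from the text).
lsb_3v = lsb_volts(2.5)

# Assumed 20V span for the plus or minus 15V system (not stated in the text).
lsb_wide = lsb_volts(20.0)

print(f"LSB at 2.5V span: {lsb_3v * 1e3:.2f} mV")
print(f"LSB at 20V span:  {lsb_wide * 1e3:.2f} mV")
print(f"Ratio: {lsb_wide / lsb_3v:.0f}x")
```

The point of the exercise is that an offset that was a small fraction of an LSB in the wide-span system can be several LSBs in the 3V system.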

Unfortunately, you can't take your old friends like the OP-07 with you on your voyage to 3V land. They are specified for operation only at plus or minus 15V. Fortunately, it's possible to do precision data acquisition with 3V rails. You'll just have to make new friends and learn new ways to make use of the old ones.

Some designers consider generating higher-voltage power supplies and then porting the resulting design over to the portable realm. These designers find it easier to just make plus or minus 5V or plus or minus 10V supplies than to try to fight the problems of low-voltage design. Though many first-time portable-instrument designers are tempted to do this, there are several reasons why it's not a good approach.

The first and most obvious reason is power. When you triple the main 3V supply and invert it to make plus or minus 9V, for example, the power for the circuit increases by a factor of 10, with efficiency losses taken into account. Also, establishing dual voltage rails with DC-to-DC converters invites conducted and radiated noise problems, which can be hard to eliminate in the close confines of a portable instrument.

The Single-Supply-Rail Goal

Even if dual supplies can be generated using only linear regulators from a pair of 9V batteries, an overall system can become unwieldy—even if noise isn't an issue. Generally, it's easier and cheaper to design systems with a single-supply rail of 5V or 3V. In unusual cases, however, it might be necessary to develop a higher or lower voltage with moderate output-current capability using a small, quiet, and well-shielded capacitive charge pump.

Obviously, the design techniques used for simple single-supply designs are different. The rule is to follow an integrated approach that makes better use of all the resources available in the system. It's especially important to make good use of a microprocessor and software to handle problems that are fixed better using those components.

Study Error Sources

Before embarking on the microprocessor approach, however, study your error sources. Designing any analog system consists of designing around, and minimizing the impact of, error sources. The tools used are some form of error budgeting followed by trial design approaches and system partitioning.

The table below lists some error sources common in a data-acquisition system. The figures in the table represent typical values for a 12-bit system with a 2.5V span, 50kHz of bandwidth, and a gain of 100.

Most of these errors will be familiar to you if you read op-amp data sheets. The errors listed are the same as those on the data sheets, but are manifested in the final system rather than in a single component. Unfortunately, most errors don't scale with supply voltage, which makes them more significant at low voltages.

| System Error Source | Typical System Error Magnitude | Uncorrected 12-Bit LSBs (Parts per 4,096) | Technique(s) to Reduce the Specified Error Source |
| --- | --- | --- | --- |
| Offset Voltage | 20mV (Vos = 200µV at system front end) | 33 | Better front-end amp, low DC gain, auto-zero, offset nulling with DACs, synchronous measurement, choppers |
| Offset-Voltage Drift | 0.5mV/°C | 25 | Same as above, plus active temperature correction |
| Voltage Noise | 1.1mV (50nV/√Hz at system front end) | 2 | Lower-voltage-noise preamplifier (generally bipolar), minimize bandwidth, software averaging |
| Current Noise | 1.5mV (1MΩ, 0.1pA/√Hz at system front end) | 2 | Low-input-current preamplifier (generally FET), low-impedance sensor/source, minimize bandwidth, software averaging |
| Power-Supply Rejection Ratio | 60dB overall (100dB at DC) | 5 | Power-supply bypassing, better amplifiers, linear or PWM supplies, minimize bandwidth, synchronous measurements |
| Common-Mode Rejection Ratio | 60dB overall at low frequencies | 5 | Careful design, component matching, instrumentation amplifiers, synchronous measurements |
| Gain-Error Linearity | 0.1% | 5 | Higher amplifier loop gain, good passives, better analog switches and muxes, software correction |
| Gain Temperature | 1% (50ppm reference, 50ppm system) | 20 | Lower TC (temperature coefficient) reference, low TC passives, forced component temperature tracking, no pots, active temperature correction, autocalibration, ratiometric design |
| A/D Errors | 0.1% (TUE* max) | 4 | Better grade of A/D, lower TC reference, larger span, software correction |

*Total unadjusted error
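A first-pass error budget can be as simple as referring each error to the A/D input and dividing by the LSB size. The sketch below reworks the offset and voltage-noise rows of the table using the stated 2.5V span, gain of 100, and 50kHz bandwidth. Treating the 50kHz figure as the noise bandwidth is a simplifying assumption, and the names are illustrative only.

```python
import math

SPAN_V = 2.5          # A/D input span (from the text)
BITS = 12
GAIN = 100            # system gain (from the text)
BANDWIDTH_HZ = 50e3   # system bandwidth (from the text)

LSB_V = SPAN_V / 2 ** BITS


def to_lsbs(error_volts: float) -> float:
    """Express an error voltage at the A/D input in 12-bit LSBs."""
    return error_volts / LSB_V


# Offset: 200uV at the front end, multiplied by the system gain.
offset_out_v = 200e-6 * GAIN

# Voltage noise: density times sqrt(bandwidth), then times the gain.
# Assumption: the 50kHz system bandwidth is used as the noise bandwidth.
noise_out_v = 50e-9 * math.sqrt(BANDWIDTH_HZ) * GAIN

print(f"Offset: {offset_out_v * 1e3:.1f} mV = {to_lsbs(offset_out_v):.0f} LSBs")
print(f"Noise:  {noise_out_v * 1e3:.1f} mV = {to_lsbs(noise_out_v):.0f} LSBs")
```

Both results land on the table's figures of roughly 33 LSBs and 2 LSBs, which shows why offset, not noise, dominates this particular budget.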

The Significance of Drift

In most cases, the absolute magnitude of an error is less important than its drift over temperature or time. This is especially true in portable instrumentation using a closely coupled microprocessor or microcontroller. Error sources due to gain error, offset error, or nonlinear characteristics of a sensor or some other portion of a system should be corrected in software.

Attempts at linearization, precise span, and zero adjustments in the analog domain don't make sense. The analog-hardware design task is simplified today for two major reasons. First, processor horsepower is very inexpensive. It's much easier to do sensor linearization in software, even if a crude lookup-table approach is used. Second, reduced spans make many of the more-clever analog-circuit tricks much harder to do, especially for the analog engineer who isn't an expert.

Using the processor approach, error sources need only be managed well enough to produce repeatable and stable inputs to your A/D converter and CPU. This still requires significant effort, attention to detail, and analog-design skill. You must truly consider the A/D converter, CPU, and software algorithm as part of the sensor-acquisition circuit, rather than as a smart voltmeter that follows it.

Internal Interfaces

Most examples of sensor-based circuits create extra error in the effort to make the final output a nice zero-to-x-volt range. Although this is needed in an industrial 4mA to 20mA transmitter, for example, it's totally unnecessary to have such clean internal interfaces in an embedded portable instrument.

When it comes to a microprocessor, it really doesn't matter if the analog circuit's range is 0.2346V to 2.4139V, for example, and its output is related to the measured parameter by a quadratic formula. Once these absolute voltages are converted to numbers by the A/D converter, massaging these numbers through a simple formula or lookup is easily accomplished in software. If it isn't, you had better spend some time simplifying the software, not making the analog circuit more complex.
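To make the lookup idea concrete, here is a minimal sketch of a crude lookup table with linear interpolation between entries. The calibration points are made up purely for illustration; a real table would come from calibrating the actual instrument, and the name `linearize` is hypothetical.

```python
from bisect import bisect_right

# Hypothetical calibration table: (A/D code, measured parameter).
# These values are invented for illustration only.
CAL_TABLE = [
    (400, 0.0),
    (1500, 25.0),
    (2600, 60.0),
    (3900, 120.0),
]


def linearize(code: int) -> float:
    """Map a raw A/D code to the measured parameter by piecewise-linear
    interpolation between calibration points, clamped at both ends."""
    codes = [c for c, _ in CAL_TABLE]
    if code <= codes[0]:
        return CAL_TABLE[0][1]
    if code >= codes[-1]:
        return CAL_TABLE[-1][1]
    i = bisect_right(codes, code)  # index of the first entry above code
    (c0, v0), (c1, v1) = CAL_TABLE[i - 1], CAL_TABLE[i]
    return v0 + (v1 - v0) * (code - c0) / (c1 - c0)


print(linearize(950))
```

Note that the analog range never has to start at zero or end at a round number; the table absorbs whatever the hardware happens to produce.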

See Figure 2. This block diagram of the data-acquisition portion of a typical sensor-based portable instrument includes a CPU and system software. Even modestly powerful processors can handle synchronous demodulation, averaging, histogramming, and some DSP. Look at the purpose of these blocks with an eye toward trade-offs in key areas.

Figure 2. A generic sensor data-acquisition block.

Let's consider the sensor first. It's important to understand the physics and the equivalent circuit of the sensor in order to effectively design an interface for it. Many sensors utilize a traditional excitation source that may not be necessary in a portable instrument.

A good example is the 1mA source often used to excite platinum RTD (resistance temperature detector) temperature-measuring sensors. A resistor can be substituted for the current source, with the voltage-divider effect removed in software when other corrections are made.
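Removing the voltage-divider effect takes one line of algebra. The sketch below assumes the A/D reading is available as a ratio of the RTD voltage to the excitation voltage, as it would be in a ratiometric design; the function name and the 2kΩ resistor in the usage line are hypothetical.

```python
def rtd_resistance_from_ratio(ratio: float, r_series: float) -> float:
    """Recover the RTD resistance from a ratiometric divider reading.

    ratio    -- V_rtd / V_excitation (assumed: code / full scale when the
                A/D reference tracks the excitation)
    r_series -- value of the resistor that replaces the current source
    """
    if not 0.0 <= ratio < 1.0:
        raise ValueError("ratio must be in [0, 1)")
    # Divider: ratio = R_rtd / (R_rtd + r_series), solved for R_rtd.
    return r_series * ratio / (1.0 - ratio)


# Hypothetical example: a 100 ohm RTD below a 2k ohm series resistor.
print(rtd_resistance_from_ratio(100.0 / 2100.0, 2000.0))
```

Because the excitation voltage cancels out of the ratio, the accuracy rests on the series resistor rather than on a precision current source.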

The first block in the signal chain is the preamplifier. It amplifies or buffers the raw sensor signal to maintain maximum signal-to-noise ratio. This portion of the circuit requires the greatest care in design and the most knowledge of the sensor's equivalent circuit. There are classic preamps for given sensors, as with excitation circuits. These can usually be implemented with modern low-power components.

For systems with very high DC gain and finite offsets that would "rail" the signal-processing chain, an offset D/A converter can be a good way to zero the system. But this should be avoided if possible.

Postamp Requirements

The next block in the chain is the postamp. This device provides simple gain, assuming that the preamp successfully raised the input signal out of the mud. The gain of this block can be adjustable if necessary, but it's generally better to use more A/D converter resolution than to resort to programmable gain. If precision is important, resort to Rail-to-Rail® input and output amplifiers only where necessary. These parts generally have complex inputs with crossover regions that can make precision operation difficult.

The postamp is often followed by a filter stage. This filter is designed to band-limit the input signal to less than half the A/D sampling frequency, when required.

The sample-and-hold, reference, and A/D converter functions are usually available in a single chip. Their error sources should be considered separately, though. Most modern A/D converters have highly capacitive inputs that can be difficult to drive.

For designs that aren't critical, the internal reference in many converters is a convenient feature. But for precision designs, think of an external reference as almost a necessity. It's even better to eliminate the reference altogether. Consider a ratiometric design where the reference is derived from the excitation signal. The example that follows uses this technique.

Finally, look at the CPU and the software. Because the CPU is embedded in the system, CPU-intensive techniques can and should be used. Synchronous demodulation, averaging, histogramming, fast Fourier transforms (FFTs), or other DSP methods should be considered—even when using modest microcontrollers.
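As one illustration of a CPU-intensive technique, the sketch below combines averaging with a simple synchronous measurement: it reads the A/D with the excitation on and off, then subtracts the two averages so that any fixed offset cancels. The callbacks `read_adc` and `set_excitation` are hypothetical stand-ins for your own hardware drivers, and a real system would also need a settling delay after each switch.

```python
def synchronous_measure(read_adc, set_excitation, cycles: int = 16) -> float:
    """Average the difference between excited and unexcited readings.

    read_adc       -- hypothetical callback returning one A/D code
    set_excitation -- hypothetical callback taking True (on) or False (off)
    """
    on_readings, off_readings = [], []
    for _ in range(cycles):
        set_excitation(True)
        on_readings.append(read_adc())   # signal plus offset
        set_excitation(False)
        off_readings.append(read_adc())  # offset only
    # Averaging reduces random noise; subtracting removes the fixed offset.
    return sum(on_readings) / cycles - sum(off_readings) / cycles


# Simulated hardware: a 500-code signal riding on a 37-code offset.
state = {"on": False}
result = synchronous_measure(
    lambda: 37 + (500 if state["on"] else 0),
    lambda on: state.update(on=on),
)
print(result)
```

The same loop structure extends naturally to alternating excitation polarity, if the sensor and drive circuit allow it.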

An Example: A Portable RTD Interface Application

Here's a design example using some of the techniques discussed above. It's an RTD (resistance temperature detector) thermometer that has a range of -50°C to +175°C with a resolution of better than 0.1°C. This single-supply 3V design draws an average current of 25µA while making 20 conversions/sec.

Sensor RT1 is a platinum RTD with a nominal resistance of 100 ohms (at 0°C). Its resistance is 80.3 ohms at -50°C and 170.3 ohms at +185°C. (RTDs aren't linear; they follow a very well defined parabolic curve to within a small fraction of a degree.)
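The parabolic curve is simple enough to invert in closed form. The sketch below uses the commonly published IEC 60751 coefficients for platinum, which are an assumption here because the article does not state the coefficients it used. The small extra correction term that the standard applies below 0°C is omitted, so the curve is treated as a pure parabola over the whole range.

```python
import math

R0 = 100.0        # nominal resistance at 0 degrees C (from the text)
A = 3.9083e-3     # assumed IEC 60751 coefficient, per degree C
B = -5.775e-7     # assumed IEC 60751 coefficient, per degree C squared


def rtd_resistance(temp_c: float) -> float:
    """Resistance of the RTD from the quadratic model R0*(1 + A*t + B*t^2)."""
    return R0 * (1.0 + A * temp_c + B * temp_c ** 2)


def rtd_temperature(resistance: float) -> float:
    """Invert the quadratic model to get temperature from resistance."""
    # Solve B*t^2 + A*t + (1 - R/R0) = 0 for the root near t = 0.
    discriminant = A * A - 4.0 * B * (1.0 - resistance / R0)
    return (-A + math.sqrt(discriminant)) / (2.0 * B)


print(f"{rtd_resistance(-50.0):.1f} ohms at -50 C")
print(f"{rtd_resistance(185.0):.1f} ohms at +185 C")
```

With these coefficients the model reproduces the two resistance values quoted above, and the inverse function is the only "linearization" the instrument needs.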

The excitation source consists of resistors R4 and R5 as part of a bridge. They limit current to approximately 1mA through the RTD. The tap between R4 and R5 is used to derive a ratiometric reference. The excitation is controlled by transistor Q1. It also provides on/off control to save power. Note that the R3 and R12 string on the right side of the bridge also draws about 1mA. The sensor subsystem therefore draws 2mA when on. The dual op amp is powered from the same switched rail, but its 34µA drain is negligible compared to the resistor currents.

U1B (half of a Maxim MAX478 op amp) comprises the preamplifier and postamp (only one stage is required here). The MAX478 is specified for operation below 3V and has a DC offset comparable to an OP-07. It draws only 17µA of supply current. Although it isn't a Rail-to-Rail op amp, it can sense at ground and within a volt of the positive rail. When powered with a 3V supply, its output can swing to 2.2V when loaded lightly.

In this application, the MAX478 is configured as a gain-of-20 difference amplifier. It subtracts the voltage across R12, which corrects for the offset resistance of 80.3 ohms at the RTD. Once the offset is eliminated, the diff-amp amplifies the changes in the RTD voltage with a gain of 20. This makes the output 1.8V full scale at 175°C.

No filter is required due to the slowly responding nature of the RTD. Moreover, interference from external sources (such as 60Hz lines) isn't an issue due to the sensor's low impedance.

The A/D converter is a Maxim MAX147. It's an eight-channel 12-bit converter chip specified to operate down to 2.7V and was initially intended for use in PDAs. It includes the sample-and-hold and is interfaced to the CPU over a 3-wire SPI serial interface. Current drain is about 1mA when active, dropping to about 10µA in shutdown. The MAX147 is capable of conversion rates of 100ksamples/sec. This speed enhances system power savings, as the A/D can sleep between conversions. Converting at 20 conversions/sec, it operates just 0.02% of the time. The circuit draws an average current of only 25µA at this rate.
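The duty-cycle arithmetic can be sketched as follows. This estimate covers the A/D converter alone, using the active and shutdown currents quoted above; it ignores wake-up time and does not include the sensor bridge or op amps, so it is not meant to reproduce the 25µA figure for the whole circuit. The function name is illustrative.

```python
def adc_average_current(conversions_per_s: float, max_rate_sps: float,
                        active_a: float, shutdown_a: float):
    """Return (duty cycle, average current) for an A/D that sleeps
    between conversions. Wake-up overhead is ignored (assumption)."""
    duty = conversions_per_s / max_rate_sps
    average_a = duty * active_a + (1.0 - duty) * shutdown_a
    return duty, average_a


# 20 conversions/sec on a 100ksamples/sec converter,
# about 1mA active and about 10uA in shutdown (values from the text).
duty, average_a = adc_average_current(20, 100e3, 1e-3, 10e-6)
print(f"Duty cycle: {duty * 100:.2f}%")
print(f"A/D average current: {average_a * 1e6:.1f} uA")
```

At this duty cycle the shutdown current dominates the converter's average drain, which is why a low shutdown specification matters more than the active current.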

The A/D has no internal reference and is operated ratiometrically. Op-amp U1A is used to buffer a 1.9V nominal point in the circuit for the A/D. The op amp is necessary, because the A/D converter draws 100µA (into its reference pin). The circuit on the output of this op-amp stage permits driving the large bypass capacitance without becoming unstable. (It isolates the capacitance with a resistor; separate AC and DC feedback paths maintain DC accuracy and AC stability.) This network isn't necessary on the other amplifier section, because the DC current drawn into the A/D inputs is very small; only isolation of the capacitive load is needed.

Figure 3. RTD Schematic.

Making Absolute Measurements

One problem with using an A/D converter ratiometrically is that it can no longer make absolute measurements directly, such as measuring a battery voltage. The trick is to measure a fixed reference as well, and compare that reading to the reading of the unknown (see the small circuit enclosed in dotted lines at the bottom of Figure 3).

To accomplish this, the battery voltage scaled by the two resistors R9 and R10 is applied to the A/D (on channel CH1) and converted. The reference (derived with a MAX6120) is converted on the second channel (CH2). The ratio of the reference reading to the battery reading is used to give an indication of the battery voltage.
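In software, the comparison reduces to a ratio of two codes. The sketch below is one way to write it, under stated assumptions: both channels are converted against the same (unknown) A/D reference, the reference voltage comes from the reference's data sheet, and the divider resistors are named `r_top` and `r_bottom` because the article does not say which of R9 and R10 is which. The numeric values in the usage line are hypothetical.

```python
def battery_voltage(code_batt: int, code_ref: int, v_ref: float,
                    r_top: float, r_bottom: float) -> float:
    """Estimate battery voltage from two readings taken against the
    same A/D reference, so the reference value itself cancels out.

    code_batt -- A/D code for the divided-down battery (CH1)
    code_ref  -- A/D code for the fixed voltage reference (CH2)
    v_ref     -- nominal output of the fixed reference, in volts
    r_top, r_bottom -- divider resistors (hypothetical naming)
    """
    if code_ref <= 0:
        raise ValueError("reference reading must be positive")
    v_scaled = v_ref * code_batt / code_ref          # voltage at the A/D pin
    return v_scaled * (r_top + r_bottom) / r_bottom  # undo the divider


# Hypothetical numbers: 1.2V reference, equal 100k ohm divider resistors.
print(battery_voltage(2560, 2048, 1.2, 100e3, 100e3))
```

The accuracy of the result depends on the fixed reference and the divider resistors, not on the ratiometric reference that the A/D uses for the sensor channel.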

The alternative to this scheme would be to use an analog switch to connect a reference when required. For the simple requirement of battery-voltage monitoring, however, the circuit shown here works nicely.

A similar version of this article appeared in the July 1996 issue of Portable Design magazine.