Can You Use the Voltage Reference to Power Your ADC Driver?


by Kris Lokere

Jul 11 2013

Linear Technology’s family of 16-, 18-, and 20-bit SAR ADCs (the LTC2378-20 family) operates with an external reference voltage of up to 5V. The largest voltage you should then apply to each ADC input pin is equal to that 5V reference voltage. It seems logical to drive the ADC with an opamp powered from a 5V supply and having rail-to-rail output swing (such as the differential LTC6362), because then you know for sure that you cannot overdrive or damage the ADC inputs.

But in a practical design, you may also want to limit the number of power supply rails. The ADC core already needs a 2.5V supply, and the voltage reference (such as the LTC6655-5) needs a supply higher than 5V. Rather than adding a third analog supply rail just for the opamp, it is tempting to power the opamp from the 5V output of the voltage reference. After all, it is right there, it is at the right voltage, and the LTC6655 reference has a nice low-noise output.

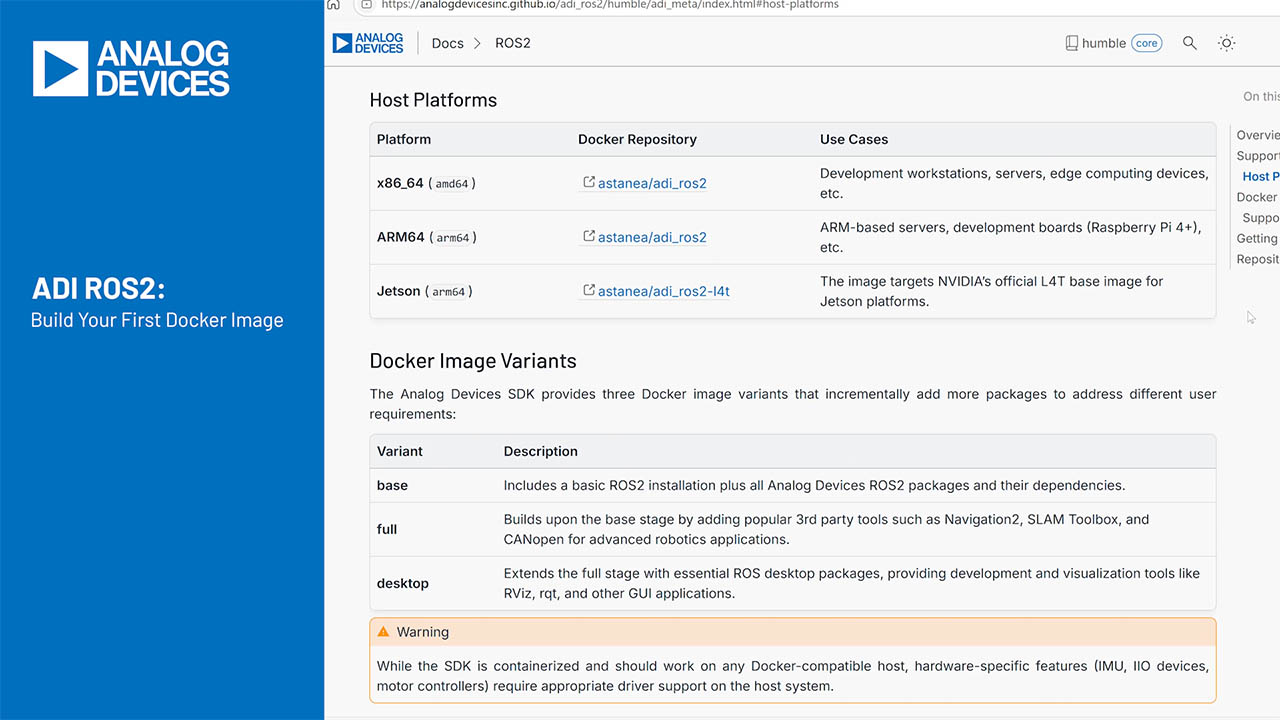

So we decided to give it a try. Figure 1 shows the circuit. The LTC6655-5 reference is powered from the board’s input voltage (in this case a lab supply set to 9V). The LT1763 LDO powers the ADC core at 2.5V. The LTC6362 differential opamp is powered from the 5V reference. The circuit works: no matter what you do at the inputs, you will never drive more voltage into the ADC than the reference voltage. The SNR (signal-to-noise ratio) is as expected at 102dB, just below the SNR of the 20-bit ADC itself, because the amplifier and resistors contribute some noise as well. (You can trade off SNR and bandwidth by choosing different values for the unmarked R’s and C’s between the amplifier and the ADC.)

Figure 1. ADC Driver Powered By Voltage Reference.
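Why does the measured SNR land just below the ADC's own figure? Uncorrelated noise sources add in power, so the driver and RC network pull the total down. The sketch below is a back-of-the-envelope model under stated assumptions, not a design tool. It treats the amplifier noise as white with a hypothetical density, filtered by a single-pole RC whose equivalent noise bandwidth is (π/2)·f₋₃dB. It assumes a full-scale ±5V differential sine. The function names and all numeric values (104dB ADC SNR, 5nV/√Hz, 50Ω, the capacitor values) are illustrative placeholders, not datasheet numbers.

```python
import math


def combined_snr_db(*snr_db):
    """Combine uncorrelated noise sources, each expressed as an SNR in dB
    relative to the same full-scale signal, by summing their noise powers."""
    total_noise_power = sum(10.0 ** (-s / 10.0) for s in snr_db)
    return -10.0 * math.log10(total_noise_power)


def implied_front_end_snr_db(measured_db, adc_db):
    """Back out the SNR the driver + RC network alone would have, assuming
    its noise is uncorrelated with the ADC's own noise."""
    residual = 10.0 ** (-measured_db / 10.0) - 10.0 ** (-adc_db / 10.0)
    if residual <= 0.0:
        raise ValueError("measured SNR must be below the ADC's own SNR")
    return -10.0 * math.log10(residual)


def rc_front_end_snr_db(noise_density_v_per_rthz, r_ohm, c_farad, signal_rms_v):
    """SNR of white driver noise filtered by a single-pole RC low-pass."""
    f_c = 1.0 / (2.0 * math.pi * r_ohm * c_farad)  # -3dB corner frequency
    noise_bw = (math.pi / 2.0) * f_c                # equivalent noise bandwidth
    noise_rms = noise_density_v_per_rthz * math.sqrt(noise_bw)
    return 20.0 * math.log10(signal_rms_v / noise_rms)


if __name__ == "__main__":
    # Hypothetical placeholder values, not taken from any datasheet.
    adc_snr = 104.0                                  # assumed ADC-only SNR, dB
    full_scale_rms = 10.0 / (2.0 * math.sqrt(2.0))   # +/-5V differential sine
    for c in (1e-9, 2.2e-9, 4.7e-9):
        fe = rc_front_end_snr_db(5e-9, 50.0, c, full_scale_rms)
        print(f"C = {c * 1e9:.1f} nF: front end {fe:.1f} dB, "
              f"total {combined_snr_db(adc_snr, fe):.1f} dB")
```

Doubling C halves the noise bandwidth and buys about 3dB of front-end SNR, at the cost of half the signal bandwidth. This is the R/C trade-off mentioned above, in its simplest form.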

If you want a low-noise, compact, 1Msps, 20-bit data-acquisition circuit that works, then Figure 1 is it, and you can stop reading now. But if you also want to take advantage of the amazingly low INL (2ppm max) of the 20-bit ADC, then it gets more interesting.

The LTC6362 draws supply current from the voltage reference, and the magnitude of that current is a function of the analog signal voltage. This is mostly because current flows through the 1k feedback resistors; this current must come from the amplifier’s supply pin, and therefore from the reference output. The voltage reference has a non-zero output impedance (at DC this shows up in the datasheet as the load regulation spec). The signal-dependent supply current combines with the reference output impedance to cause a signal-dependent change in the reference voltage. Because the ADC digitizes the ratio of the input voltage to the reference voltage, and the reference voltage gets smaller as the input voltage gets larger, the ratio changes non-linearly and you get a little bit of distortion.

We measured the Integral Nonlinearity (INL) of the circuit using a 2kHz sine wave input and achieved about ±16ppm maximum INL. This is significant compared to the ±2ppm max INL of the 20-bit LTC2378-20, but it can be almost unnoticeable for most 16-bit applications (about ±1LSB). If we lower the input frequency to 200Hz, where the LTC6655 reference has lower output impedance, the INL improves to ±4ppm.
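The mechanism described above can be illustrated with a quasi-static toy model. This is a sketch under several simplifying assumptions, not a model of the actual LTC6362/LTC6655 circuit:

* Each driver output swings ±Vref/2 around a Vref/2 common mode, and the summing nodes sit at that common mode.
* Only the output above the summing node draws its feedback-resistor current from the reference.
* A single, frequency-independent `z_out` stands in for the reference's output impedance at the signal frequency.

The model ignores the ADC's own reference current and all dynamic effects. The function names and the impedance values are hypothetical. The model is not intended to reproduce the measured ±16ppm.

```python
"""Quasi-static toy model: a signal-dependent load on the reference
becomes ADC integral nonlinearity (INL). All values are hypothetical."""


def reference_load_current(x, vref=5.0, r_f=1000.0, i_q=0.0):
    """Current (A) the driver pulls from the reference for a normalized
    differential input x in [-1, 1].

    Assumes each output swings +/- vref/2 around a vref/2 common mode and the
    summing nodes sit at that common mode. The output above the summing node
    sources its feedback-resistor current from the positive supply (the
    reference); the other output sinks its current to ground.
    """
    return i_q + abs(x) * (vref / 2.0) / r_f


def adc_reading(x, z_out, vref=5.0, r_f=1000.0, i_q=0.0):
    """Normalized ADC result: input voltage over the sagging reference."""
    v_in = x * vref  # differential input; set by the source, not the reference
    v_ref_actual = vref - z_out * reference_load_current(x, vref, r_f, i_q)
    return v_in / v_ref_actual


def inl_ppm(z_out, n_points=2001, **circuit):
    """Worst-case deviation from a least-squares straight line, in ppm of the
    full differential span (which is 2 in normalized units)."""
    xs = [-1.0 + 2.0 * k / (n_points - 1) for k in range(n_points)]
    ys = [adc_reading(x, z_out, **circuit) for x in xs]
    mean_x = sum(xs) / n_points
    mean_y = sum(ys) / n_points
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    worst = max(abs(y - (slope * x + intercept)) for x, y in zip(xs, ys))
    return worst / 2.0 * 1e6


if __name__ == "__main__":
    # Hypothetical reference output impedances at the signal frequency.
    for z in (0.0, 0.01, 0.05, 0.2):
        print(f"Zout = {z:.2f} ohm -> INL about {inl_ppm(z):.2f} ppm")
```

In this model the error term is roughly proportional to x·|x|, so the INL scales about linearly with `z_out`. That is consistent with the observation that the INL improves at 200Hz, where the reference impedance is lower. A constant quiescent current on its own only shifts the reference (a gain error that the best-fit line absorbs).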

In summary, for 16-bit or 18-bit performance with moderate input signal frequencies, you can save a supply rail by powering the amplifier directly from the reference voltage. But to achieve the full linearity performance of the 20-bit ADCs, especially at higher input frequencies, you should provide a separate supply for the amplifier so that the reference voltage does not get modulated by the signal.
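The resolution thresholds in this summary can be checked with a simple scaling. This assumes the ppm INL figures are referred to the full-scale range, so one LSB of an N-bit converter is 2^-N of full scale. The helper name is hypothetical; the ppm values are the ones quoted above.

```python
def ppm_to_lsb(error_ppm, bits):
    """Express an error given in ppm of full scale as LSBs of an N-bit code."""
    return error_ppm * 1e-6 * (2 ** bits)


if __name__ == "__main__":
    # INL figures quoted in the article, in ppm of full scale.
    cases = {"2kHz input": 16.0, "200Hz input": 4.0, "LTC2378-20 max spec": 2.0}
    for label, ppm in cases.items():
        lsbs = ", ".join(f"{b}-bit: {ppm_to_lsb(ppm, b):.2f} LSB" for b in (16, 18, 20))
        print(f"{label} (±{ppm:g}ppm) -> {lsbs}")
```

The ±16ppm at 2kHz works out to about 1 LSB at 16 bits but about 17 LSB at 20 bits. The ±4ppm at 200Hz is about 1 LSB at 18 bits.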

About the Authors

Kris Lokere is a strategic applications manager for signal chain products, joining ADI as part of the merger with Linear Technology. Kris enjoys architecting systems that combine technologies from multiple product lines. I...