AN-2616: 使用MAX78000/MAX78002 进行面部识别

摘要

本应用笔记描述了一种利用人工智能(AI)微控制器MAX78000/MAX78002实现面部识别应用的方法,由三个独立的模型组成:面部检测CNN模型、面部识别CNN模型和点积模型。

引言

MAX78000 [1]和 MAX78002 [2]是 AI 微控制器,包含一个超低功耗的卷积神经网络(CNN)推理引擎,用于在电池供电的物联网(IoT)设备上运行AI边缘应用。这些微控制器可执行许多复杂的CNN网络,以满足关键性能要求。

本文描述了一种基于单一面部识别应用的方法,调用以下三个CNN模型,分别执行不同的任务:

- 面部检测CNN模型检测所捕获图像中的面部,并提取仅包含一个面部的矩形子图像。

- 面部识别CNN模型通过为给定的面部图像生成嵌入向量(embedding),来识别图像中的人物。

- 点积模型会输出表示给定图像的嵌入向量与数据库中的嵌入向量之间相似度的点积。

模型可以使用点积相似度作为距离度量,从而根据嵌入向量的距离,将图像识别为某个已知对象或标记为“未知”。

MAX78000 面部识别应用

面部识别应用[4]只能在MAX78000 Feather板[3]上运行,因为该板支持SD卡。

面部检测、面部识别 和点积 模型按顺序依次执行。

本应用的难点在于,当所有模型超过MAX78000 CNN引擎的8位权重容量432KB及MAX78000内部闪存的存 储限制时,该如何利用这些模型。在此示例中,面部检测和 点积模型的权重存储在MAX78000内部闪存中,而 面部识别CNN模型的权重存储在外部SD存储卡中,并且在检测到面部时立即重新加载。

SDHC_weights 子项目可将面部识别CNN权重(weights_2.h)以二进制格式存储在SD卡中。

面部检测

面部检测CNN模型有16层,使用168x224 RGB图像作为输入。

Face Detection CNN:

SUMMARY OF OPS

Hardware: 589,595,888 ops (588,006,720 macc; 1,589,168 comp; 0 add; 0 mul; 0 bitwise)

Layer 0: 4,327,680 ops (4,064,256 macc; 263,424 comp; 0 add; 0 mul; 0 bitwise)

Layer 1: 11,063,808 ops (10,838,016 macc; 225,792 comp; 0 add; 0 mul; 0 bitwise)

Layer 2: 43,502,592 ops (43,352,064 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 3: 86,854,656 ops (86,704,128 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 4: 86,854,656 ops (86,704,128 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 5: 86,854,656 ops (86,704,128 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 6: 173,709,312 ops (173,408,256 macc; 301,056 comp; 0 add; 0 mul; 0 bitwise)

Layer 7 (backbone_conv8): 86,779,392 ops (86,704,128 macc; 75,264 comp; 0 add; 0 mul; 0 bitwise)

Layer 8 (backbone_conv9): 5,513,088 ops (5,419,008 macc; 94,080 comp; 0 add; 0 mul; 0 bitwise)

Layer 9 (backbone_conv10): 1,312,640 ops (1,290,240 macc; 22,400 comp; 0 add; 0 mul; 0 bitwise)

Layer 10 (conv12_1): 647,360 ops (645,120 macc; 2,240 comp; 0 add; 0 mul; 0 bitwise)

Layer 11 (conv12_2): 83,440 ops (80,640 macc; 2,800 comp; 0 add; 0 mul; 0 bitwise)

Layer 12: 1,354,752 ops (1,354,752 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 13: 40,320 ops (40,320 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 14: 677,376 ops (677,376 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 15: 20,160 ops (20,160 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

RESOURCE USAGE

Weight memory: 275,184 bytes out of 442,368 bytes total (62%)

Bias memory: 536 bytes out of 2,048 bytes total (26%)

每次执行之前,相应的CNN权重、偏置和配置都会加载到CNN引擎中。

// Power off CNN after unloading result to clear all CNN registers

// It is needed to load and run other CNN models

cnn_disable();

// Enable CNN peripheral, enable CNN interrupt, turn on CNN clock

// CNN clock: 50MHz div 1

cnn_enable(MXC_S_GCR_PCLKDIV_CNNCLKSEL_PCLK, MXC_S_GCR_PCLKDIV_CNNCLKDIV_DIV1);

/* Configure CNN_1 to detect a face */

cnn_1_init(); // Bring CNN state machine into consistent state

cnn_1_load_weights(); // Load CNN kernels

cnn_1_load_bias(); // Load CNN bias

cnn_1_configure(); // Configure CNN state machine

面部检测CNN模型的输出是边界框的坐标及其置信度分数。非极大值抑制(NMS)算法会选择置信度分数最高的边界框,并将其显示在TFT上。

如果面部检测CNN模型检测到了面部,则只包含一个面部的矩形子图像会被调整为112x112 RGB图像,以匹配面部识别 CNN模型的输入。

面部识别

面部识别CNN模型有17层,输入为112x112 RGB。

Face Identification CNN:

SUMMARY OF OPS

Hardware: 199,784,640 ops (198,019,072 macc; 1,746,752 comp; 18,816 add; 0 mul; 0 bitwise)

Layer 0: 11,239,424 ops (10,838,016 macc; 401,408 comp; 0 add; 0 mul; 0 bitwise)

Layer 1: 29,403,136 ops (28,901,376 macc; 501,760 comp; 0 add; 0 mul; 0 bitwise)

Layer 2: 58,003,456 ops (57,802,752 macc; 200,704 comp; 0 add; 0 mul; 0 bitwise)

Layer 3: 21,876,736 ops (21,676,032 macc; 200,704 comp; 0 add; 0 mul; 0 bitwise)

Layer 4: 7,375,872 ops (7,225,344 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 5: 21,826,560 ops (21,676,032 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 6: 1,630,720 ops (1,605,632 macc; 25,088 comp; 0 add; 0 mul; 0 bitwise)

Layer 7: 14,450,688 ops (14,450,688 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 8: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 9: 12,544 ops (0 macc; 0 comp; 12,544 add; 0 mul; 0 bitwise)

Layer 10: 3,261,440 ops (3,211,264 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 11: 10,888,192 ops (10,838,016 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 12: 912,576 ops (903,168 macc; 9,408 comp; 0 add; 0 mul; 0 bitwise)

Layer 13: 10,838,016 ops (10,838,016 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 14: 809,088 ops (802,816 macc; 6,272 comp; 0 add; 0 mul; 0 bitwise)

Layer 15: 7,225,344 ops (7,225,344 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 16: 22,656 ops (16,384 macc; 0 comp; 6,272 add; 0 mul; 0 bitwise)

Layer 17: 8,192 ops (8,192 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

RESOURCE USAGE

Weight memory: 365,408 bytes out of 442,368 bytes total (82.6%)

Bias memory: 1,296 bytes out of 2,048 bytes total (63.3%)

加载 面部识别CNN的配置、权重和偏置之前,必须清除CNN引擎状态机和内存,以避免其受到之前CNN模型 执行的影响。一种清除方法是通过调用cnn_disable()函数来关闭CNN引擎。

// Power off CNN after unloading result to clear all CNN registers

// It is needed to load and run other CNN models

cnn_disable();

// Enable CNN peripheral, enable CNN interrupt, and turn on CNN clock

// CNN clock: 50MHz div 1

cnn_enable(MXC_S_GCR_PCLKDIV_CNNCLKSEL_PCLK, MXC_S_GCR_PCLKDIV_CNNCLKDIV_DIV1);

/* Configure CNN_2 to recognize a face */

cnn_2_init(); // Bring CNN state machine into consistent state

cnn_2_load_weights_from_SD(); // Load CNN kernels from SD card

cnn_2_load_bias(); // Reload CNN bias

cnn_2_configure(); // Configure CNN state machine

面部识别CNN模型的输出是一个长度为64的嵌入向量,对应于面部图像。将嵌入向量作为输入提供给点积模 型之前,向量会进行L2归一化。

点积

点积模型有一个线性层,使用长度为64的嵌入向量作为输入。

Dot Product CNN:

SUMMARY OF OPS

Hardware: 65,536 ops (65,536 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 0: 65,536 ops (65,536 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

RESOURCE USAGE

Weight memory: 65,536 bytes out of 442,368 bytes total (14.8%)

Bias memory: 0 bytes out of 2,048 bytes total (0.0%)

同样,在加载点积CNN的配置、权重和偏置之前,必须清除CNN引擎状态机和内存。

// Power off CNN after unloading result to clear all CNN registers

// It is needed to load and run other CNN models

cnn_disable();

// Enable CNN peripheral, enable CNN interrupt, turn on CNN clock

// CNN clock: 50MHz div 1

cnn_enable(MXC_S_GCR_PCLKDIV_CNNCLKSEL_PCLK, MXC_S_GCR_PCLKDIV_CNNCLKDIV_DIV1);

/* Configure CNN_3 for dot product */

cnn_3_init(); // Bring CNN state machine into consistent state

cnn_3_load_weights(); // Load CNN kernels

cnn_3_load_bias(); // Reload CNN bias

cnn_3_configure(); // Configure CNN state machine

点积 模型的输出是1024个点积相似度,每个相似度表示给定面部与数据库中记录的面部之间的相似性。如果点积相似度的最大值高于阈值,则具有最大相似度的对象将被判定为所识别的人脸,并显示在TFT上。否则,输 出将是“未知”。

MAX78002 面部识别应用

面部识别应用[5]在MAX78002评估(EV)套件板[6]上运行。

与MAX78000 应用类似, 面部检测、面部识别和点积 模型按顺序依次执行。然而,MAX78002具有更大的内部闪存,可以存储模型的所有权重。

本应用仅在初始化时加载所有权重和层配置,每次推理时仅重新加载偏置。通过这种方法,模型切换的开销大大减少。

为了将所有模型统一存放在CNN内存中,必须以相似的方式安排各层的偏移量,使模型彼此相连。在此示例中,层偏移量的安排如表1所示。

| CNN 模型 | 起始层 | 结束层 |

| 面部识别 | 0 | 72 |

| 点积 | 73 | 73 |

| 面部检测 | 74 | 89 |

使用该方法时需注意,MAX78002支持的最大层数为128层,不得超过此限制。

在合成阶段,若模型的起始层不为0,则必须在network.yaml文件中的模型层之前添加直通层。network.yaml文件的示例可以在AI8x-Synthesis存储库[7]中找到。

对于本应用,另一个需要考虑的因素是权重偏移量的调整。MAX78002有64个并行处理器,每个处理器有4096个CNN内核,可存储9个8位精度的参数。调整权重偏移量时,应考虑前一个模型的内核内存使用情况。

在此示例中,权重偏移量的安排如表2所示。

| CNN 模型 | 权重偏移量 | 内核数 |

| 面部识别 | 0 | 1580 |

| 点积 | 2000 | 114 |

| 面部检测 | 2500 |

在合成阶段,可以使用“–start-layer”和“–weight-start”参数来添加直通层和权重偏移量。合成脚本的示例 参见AI8x-Synthesis 存储库[7]。

初始化时,所有模型权重和配置都会加载。

cnn_1_enable(MXC_S_GCR_PCLKDIV_CNNCLKSEL_IPLL, MXC_S_GCR_PCLKDIV_CNNCLKDIV_DIV4);

cnn_1_init(); // Bring CNN state machine into consistent state

cnn_1_load_weights(); // Load kernels of CNN_1

cnn_1_configure(); // Configure CNN_1 layers

cnn_2_load_weights(); // Load kernels of CNN_2

cnn_2_configure(); // Configure CNN_2 layers

cnn_3_load_weights(); // Load kernels of CNN_3

cnn_3_configure(); // Configure CNN_3 layers

面部检测

面部检测CNN模型有16层,使用168x224 RGB图像作为输入。

Face Detection CNN: SUMMARY OF OPS Hardware: 589,595,888 ops (588,006,720 macc; 1,589,168 comp; 0 add; 0 mul; 0 bitwise)

Layer 74: 4,327,680 ops (4,064,256 macc; 263,424 comp; 0 add; 0 mul; 0 bitwise)

Layer 75: 11,063,808 ops (10,838,016 macc; 225,792 comp; 0 add; 0 mul; 0 bitwise)

Layer 76: 43,502,592 ops (43,352,064 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 77: 86,854,656 ops (86,704,128 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 78: 86,854,656 ops (86,704,128 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 79: 86,854,656 ops (86,704,128 macc; 150,528 comp; 0 add; 0 mul; 0 bitwise)

Layer 80: 173,709,312 ops (173,408,256 macc; 301,056 comp; 0 add; 0 mul; 0 bitwise)

Layer 81 (backbone_conv8): 86,779,392 ops (86,704,128 macc; 75,264 comp; 0 add; 0 mul; 0 bitwise)

Layer 82 (backbone_conv9): 5,513,088 ops (5,419,008 macc; 94,080 comp; 0 add; 0 mul; 0 bitwise)

Layer 83 (backbone_conv10): 1,312,640 ops (1,290,240 macc; 22,400 comp; 0 add; 0 mul; 0 bitwise)

Layer 84 (conv12_1): 647,360 ops (645,120 macc; 2,240 comp; 0 add; 0 mul; 0 bitwise)

Layer 85 (conv12_2): 83,440 ops (80,640 macc; 2,800 comp; 0 add; 0 mul; 0 bitwise)

Layer 86: 1,354,752 ops (1,354,752 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 87: 40,320 ops (40,320 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 88: 677,376 ops (677,376 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 89: 20,160 ops (20,160 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

RESOURCE USAGE

Weight memory: 275,184 bytes out of 2,396,160 bytes total (11%)

Bias memory: 536 bytes out of 8,192 bytes total (7%)

当本应用以面部检测模型启动时,相应的偏置值在每次执行之前加载到CNN引擎中。然后,执行面部检测的 CNN_1 和FIFO 配置。

cnn_1_load_bias(); // Load bias data of CNN_1

// Bring CNN_1 state machine of Face Detection model into consistent state

*((volatile uint32_t *) 0x51000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x51000008) = 0x00004a59; // Layer count

*((volatile uint32_t *) 0x52000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x52000008) = 0x00004a59; // Layer count

*((volatile uint32_t *) 0x53000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x53000008) = 0x00004a59; // Layer count

*((volatile uint32_t *) 0x54000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x54000008) = 0x00004a59; // Layer count

// Disable FIFO control

*((volatile uint32_t *) 0x50000000) = 0x00000000;

与MAX78000应用类似,面部检测 CNN模型的输出是一组边界框坐标和相应的置信度分数。非极大值抑制(NMS)算法会选择置信度分数最高的边界框并将其显示在TFT上。

如果面部检测CNN模型检测到面部,则仅包含一个面部的矩形子图像会被选中并调整为112x112 RGB图像,以匹配面部识别CNN模型的输入。

面部识别

面部识别别CNN模型有73层,使用112x112 RGB图像作为输入。

Face Identification CNN:

SUMMARY OF OPS

Hardware: 445,470,720 ops (440,252,416 macc; 4,848,256 comp; 370,048 add; 0 mul; 0 bitwise)

Layer 0: 22,478,848 ops (21,676,032 macc; 802,816 comp; 0 add; 0 mul; 0 bitwise)

Layer 1: 2,809,856 ops (1,806,336 macc; 1,003,520 comp; 0 add; 0 mul; 0 bitwise)

Layer 2: 231,612,416 ops (231,211,008 macc; 401,408 comp; 0 add; 0 mul; 0 bitwise)

Layer 3: 1,404,928 ops (903,168 macc; 501,760 comp; 0 add; 0 mul; 0 bitwise)

Layer 4: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 5: 6,522,880 ops (6,422,528 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 6: 1,003,520 ops (903,168 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 7: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 8: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 9: 50,176 ops (0 macc; 0 comp; 50,176 add; 0 mul; 0 bitwise)

Layer 10: 6,522,880 ops (6,422,528 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 11: 1,003,520 ops (903,168 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 12: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 13: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 14: 50,176 ops (0 macc; 0 comp; 50,176 add; 0 mul; 0 bitwise)

Layer 15: 6,522,880 ops (6,422,528 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 16: 1,003,520 ops (903,168 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 17: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 18: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 19: 50,176 ops (0 macc; 0 comp; 50,176 add; 0 mul; 0 bitwise)

Layer 20: 6,522,880 ops (6,422,528 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 21: 1,003,520 ops (903,168 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 22: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 23: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 24: 50,176 ops (0 macc; 0 comp; 50,176 add; 0 mul; 0 bitwise)

Layer 25: 13,045,760 ops (12,845,056 macc; 200,704 comp; 0 add; 0 mul; 0 bitwise)

Layer 26: 702,464 ops (451,584 macc; 250,880 comp; 0 add; 0 mul; 0 bitwise)

Layer 27: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 28: 6,472,704 ops (6,422,528 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 29: 501,760 ops (451,584 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 30: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 31: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 32: 25,088 ops (0 macc; 0 comp; 25,088 add; 0 mul; 0 bitwise)

Layer 33: 6,472,704 ops (6,422,528 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 34: 501,760 ops (451,584 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 35: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 36: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 37: 25,088 ops (0 macc; 0 comp; 25,088 add; 0 mul; 0 bitwise)

Layer 38: 6,472,704 ops (6,422,528 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 39: 501,760 ops (451,584 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 40: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 41: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 42: 25,088 ops (0 macc; 0 comp; 25,088 add; 0 mul; 0 bitwise)

Layer 43: 6,472,704 ops (6,422,528 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 44: 501,760 ops (451,584 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 45: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 46: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 47: 25,088 ops (0 macc; 0 comp; 25,088 add; 0 mul; 0 bitwise)

Layer 48: 6,472,704 ops (6,422,528 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 49: 501,760 ops (451,584 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 50: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 51: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 52: 25,088 ops (0 macc; 0 comp; 25,088 add; 0 mul; 0 bitwise)

Layer 53: 6,472,704 ops (6,422,528 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 54: 501,760 ops (451,584 macc; 50,176 comp; 0 add; 0 mul; 0 bitwise)

Layer 55: 6,422,528 ops (6,422,528 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 56: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 57: 25,088 ops (0 macc; 0 comp; 25,088 add; 0 mul; 0 bitwise)

Layer 58: 12,945,408 ops (12,845,056 macc; 100,352 comp; 0 add; 0 mul; 0 bitwise)

Layer 59: 351,232 ops (225,792 macc; 125,440 comp; 0 add; 0 mul; 0 bitwise)

Layer 60: 3,211,264 ops (3,211,264 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 61: 1,618,176 ops (1,605,632 macc; 12,544 comp; 0 add; 0 mul; 0 bitwise)

Layer 62: 125,440 ops (112,896 macc; 12,544 comp; 0 add; 0 mul; 0 bitwise)

Layer 63: 1,605,632 ops (1,605,632 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 64: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 65: 6,272 ops (0 macc; 0 comp; 6,272 add; 0 mul; 0 bitwise)

Layer 66: 1,618,176 ops (1,605,632 macc; 12,544 comp; 0 add; 0 mul; 0 bitwise)

Layer 67: 125,440 ops (112,896 macc; 12,544 comp; 0 add; 0 mul; 0 bitwise)

Layer 68: 1,605,632 ops (1,605,632 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 69: 0 ops (0 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 70: 6,272 ops (0 macc; 0 comp; 6,272 add; 0 mul; 0 bitwise)

Layer 71: 809,088 ops (802,816 macc; 6,272 comp; 0 add; 0 mul; 0 bitwise)

Layer 72: 14,464 ops (8,192 macc; 0 comp; 6,272 add; 0 mul; 0 bitwise)

RESOURCE USAGE

Weight memory: 909,952 bytes out of 2,396,160 bytes total (38.0%)

Bias memory: 7,296 bytes out of 8,192 bytes total (89.1%)

要运行面部识别 模型,相应的偏置值应在每次执行之前加载到CNN中。然后,执行面部识别的CNN_2和FIFO 配置。

cnn_2_load_bias(); // Load bias data of CNN_2

// Bring CNN_2 state machine of Face ID model into consistent state

*((volatile uint32_t *) 0x51000000) = 0x00108008; // Stop SM

*((volatile uint32_t *) 0x51000008) = 0x00000048; // Layer count

*((volatile uint32_t *) 0x52000000) = 0x00108008; // Stop SM

*((volatile uint32_t *) 0x52000008) = 0x00000048; // Layer count

*((volatile uint32_t *) 0x53000000) = 0x00108008; // Stop SM

*((volatile uint32_t *) 0x53000008) = 0x00000048; // Layer count

*((volatile uint32_t *) 0x54000000) = 0x00108008; // Stop SM

*((volatile uint32_t *) 0x54000008) = 0x00000048; // Layer count

// Enable FIFO control

*((volatile uint32_t *) 0x50000000) = 0x00001108; // FIFO control

面部识别CNN模型的输出是一个长度为64的嵌入向量,对应于输入的面部图像。将嵌入向量作为输入提供给 点积模型之前,向量会进行L2归一化。

点积

点积模型有一个线性层,使用长度为64的嵌入向量作为输入。

Dot Product CNN:

SUMMARY OF OPS

Hardware: 65,536 ops (65,536 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

Layer 73: 65,536 ops (65,536 macc; 0 comp; 0 add; 0 mul; 0 bitwise)

RESOURCE USAGE

Weight memory: 65,536 bytes out of 2,396,160 bytes total (2.7%)

Bias memory: 0 bytes out of 8,192 bytes total (0.0%)

要运行点积 模型,相应的偏置值应在每次执行之前加载到CNN引擎中。然后,执行点积的CNN_3和FIFO 配置。

cnn_3_load_bias(); // Load bias data of CNN_3

//Dot product CNN state machine configuration

*((volatile uint32_t *) 0x51000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x51000008) = 0x00004949; // Layer count

*((volatile uint32_t *) 0x52000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x52000008) = 0x00004949; // Layer count

*((volatile uint32_t *) 0x53000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x53000008) = 0x00004949; // Layer count

*((volatile uint32_t *) 0x54000000) = 0x00100008; // Stop SM

*((volatile uint32_t *) 0x54000008) = 0x00004949; // Layer count

// Disable FIFO control

*((volatile uint32_t *) 0x50000000) = 0x00000000;

点积模型的输出是1024个点积相似度,每个相似度表示给定面部与数据库中记录的面部之间的相似性。如果点 积相似度的最大值高于阈值,则具有最大相似度的对象将被判定为所识别的人脸,并显示在TFT上。否则,输 出将是“未知”。

添加新对象的图像

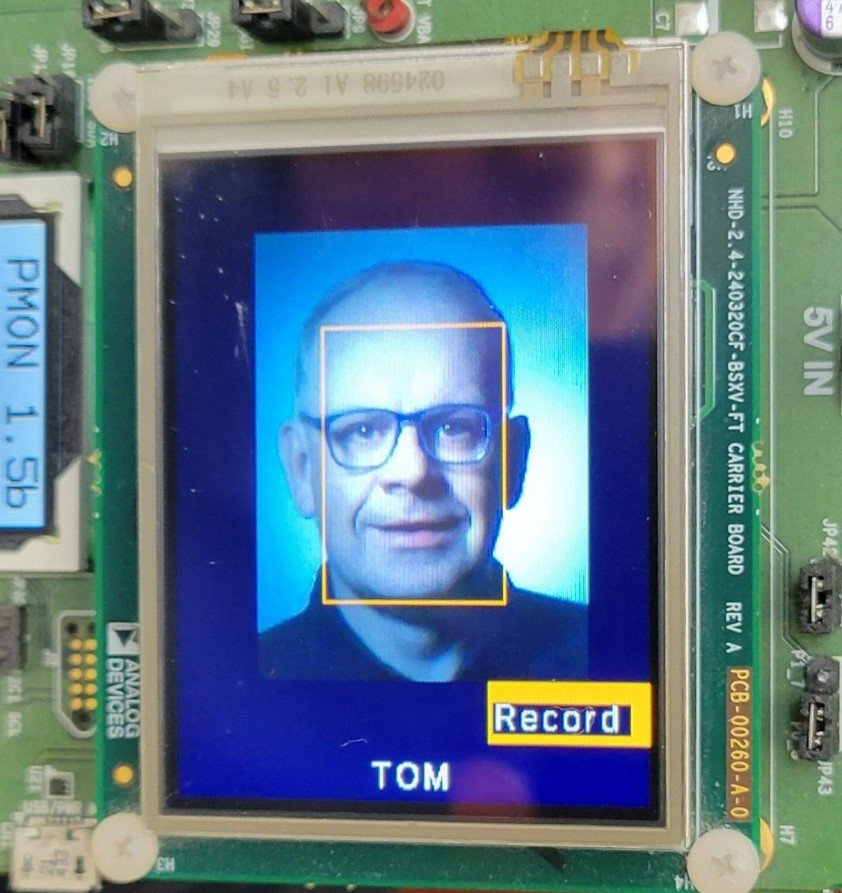

本应用允许将新对象添加到数据库中。按触摸屏上的“Record”(记录)按钮,可以输入对象姓名。

下一步是用摄像头拍摄对象的面部。按“OK”(确定)按钮可将拍摄的图像添加到数据库,或按“Retry”(重试)按钮重新拍摄。

本应用根据新对象的面部来计算嵌入向量,并将其存储在内部闪存数据库中。点积CNN模型使用嵌入向量数据库来识别对象并做出最终决策。

结语

凭借超低功耗CNN推理引擎,MAX78000/MAX78002微控制器非常适合电池供电的物联网应用。AI微控制器MAX78000 [1]和 MAX78002 [2]支持运行多个模型,从而以高效节能的方式实现非常复杂的应用。

参考文献

[1] MAX78000 数据手册

[2] MAX78002 数据手册