DRIVING 5G ENERGY EFFICIENCY WITH EDGE AI AND DIGITAL PREDISTORTION

While 5G has transformed the world and delivered on its promise of speed, low latency, and dense connectivity, it comes with a hidden cost: energy consumption. According to Ericsson, there will be 6.7 billion 5G subscribers by the decade’s end.1 Yet despite being far more efficient in transmitting data than 4G networks, the higher volumes carried by 5G will drive 4× to 5× more energy usage than legacy networks.2

Key to the challenge is power consumption within the Radio Access Network (RAN), where the majority of power is consumed, particularly at the power amplifier (PA) stage. The strain of balancing power efficiency against signal distortion, wider operating bandwidths, and more rapid signal changes means that RAN developers now demand new levels of flexibility, intelligence, and real-time optimization. In short, RAN equipment needs to become more adaptable.

As 5G faces new pressures for adaptable, energy efficient systems, technologists have begun reckoning with the new capabilities offered by rapidly advancing artificial intelligence. Beyond the headlines on large language models, new opportunities are opening for localized decision-making in the real world, applying AI’s analytical strengths to complex applications in real time. Radio technologists have been investigating how these capabilities can be applied to RAN power consumption and see an opportunity.

This push to incorporate AI could not come soon enough for mobile operators. In fact, McKinsey found that the impact of energy consumption on carriers' bottom lines outpaced sales growth by 50%.3 At the same time, many mobile communications providers (Verizon, T-Mobile, and Bharti Airtel, among others) are committed to Net Zero targets by 2050.

Energy efficiency is a critical concern exacerbated by 5G-era networks. While 4G networks already see energy costs making up 20% to 25% of total network cost of ownership,4 the transition to 5G significantly raises the stakes. High performance 5G deployments—especially when layered on top of legacy 2G, 3G, and 4G infrastructure—can drive energy demand up by as much as 140% in some scenarios.2

TECH TALK

Three factors drive increased 5G-era power consumption (reference 5):

Massive MIMO

5G massive MIMO (M-MIMO) cell site “lightbulbs” in a 64 × 64 configuration are up to 16× bigger and brighter than current 2 × 2 or 4 × 4 MIMO configurations, and they require more energy. For example, a 5G base station needs 3× more power than a 4G base station.

More sites

5G-era network densification with new macro and small cell sites in urban areas will add to the increase in total energy consumption.

Mobile data traffic growth

The concept of “Bit drives Watt” means that mobile data traffic growth of up to 50% drives an increase in power consumption, despite 5G being more power efficient on a per-bit basis.
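
The “Bit drives Watt” arithmetic can be sketched in a few lines. Total energy is roughly traffic multiplied by energy per bit, so the two effects multiply. The 50% traffic growth comes from the text above; the 20% per-bit saving is a made-up illustration value, and both function names are hypothetical.

```python
def relative_energy(traffic_growth: float, per_bit_saving: float) -> float:
    """Return new energy use relative to today (1.0 means unchanged).

    Energy = traffic (bits) x energy per bit, so the two factors multiply.
    """
    return (1.0 + traffic_growth) * (1.0 - per_bit_saving)


def break_even_saving(traffic_growth: float) -> float:
    """Per-bit saving needed to keep total energy flat for a given traffic growth."""
    return 1.0 - 1.0 / (1.0 + traffic_growth)


# 50% traffic growth (from the text) with an assumed 20% per-bit saving.
print(f"relative energy: {relative_energy(0.50, 0.20):.2f}")
print(f"break-even per-bit saving: {break_even_saving(0.50):.1%}")
```

Under these assumptions, energy use still rises by about 20%, and per-bit energy would have to fall by roughly a third just to hold total consumption flat.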

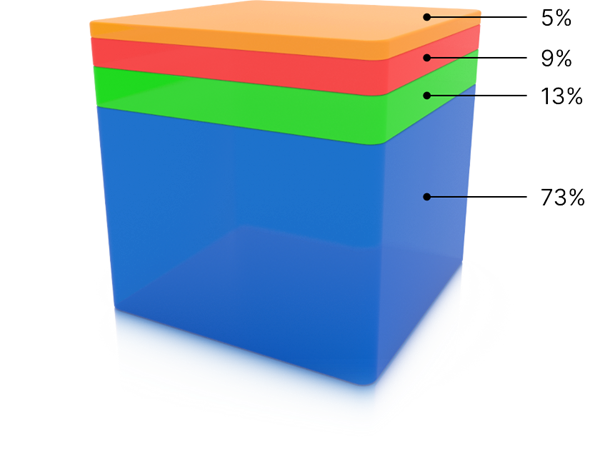

Figure: Operator energy use, broken down into Radio Networks, Core Network, Data Centers, and Other Operations. Source: GSMA, Energy Consumption Breakdown, 2021 (reference 6).

5G’s Energy Challenge

At the heart of 5G’s energy use lies a delicate trade-off: PAs are most energy efficient when pushed to their limits—but that same operating mode distorts the signal, leading to compliance issues and degraded performance. To avoid this, most PAs run in a backoff mode, sacrificing efficiency to maintain signal fidelity.
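
To put rough numbers on the backoff trade-off, the sketch below uses the textbook ideal class-B amplifier, whose efficiency peaks at π/4 (about 78.5%) at saturation and falls in proportion to output voltage amplitude. This is an idealized stand-in chosen for illustration, not a model of any particular base station PA, and the backoff values are arbitrary.

```python
import math


def class_b_efficiency(backoff_db: float) -> float:
    """Drain efficiency of an ideal class-B amplifier at a given output backoff.

    Efficiency scales with output voltage amplitude, that is with
    sqrt(P_out / P_sat) = 10 ** (-backoff_db / 20), and peaks at pi / 4.
    """
    if backoff_db < 0:
        raise ValueError("backoff_db must be >= 0 (0 dB means saturation)")
    return (math.pi / 4.0) * 10.0 ** (-backoff_db / 20.0)


# Arbitrary backoff levels chosen for illustration.
for backoff_db in (0, 3, 6, 8, 10):
    eff = class_b_efficiency(backoff_db)
    print(f"{backoff_db:>2} dB backoff -> {eff:.1%} efficiency")
```

In this idealized model, every 6 dB of backoff roughly halves the efficiency, which is why reducing the backoff needed for a clean signal matters so much.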

“Memory effects” further complicate the PA’s nonlinear behavior. PAs contain components that respond slowly and “remember” past inputs, so their nonlinearity depends on the history of the signals that have passed through them.
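
A toy model makes the idea of memory concrete. In the sketch below, the output depends on the current sample and the previous one, each passed through a cubic nonlinearity (a two-tap memory polynomial). The function name and all coefficients are invented for illustration and do not describe a real device.

```python
def pa_with_memory(x, a1=1.0, a3=-0.2, b1=0.1, b3=-0.05):
    """Toy memory-polynomial PA: output depends on current and previous sample.

    y[n] = a1*x[n] + a3*x[n]*|x[n]|^2 + b1*x[n-1] + b3*x[n-1]*|x[n-1]|^2
    All coefficients are made-up illustration values.
    """
    y = []
    prev = 0.0  # the amplifier starts with no signal history
    for sample in x:
        now = a1 * sample + a3 * sample * abs(sample) ** 2
        past = b1 * prev + b3 * prev * abs(prev) ** 2
        y.append(now + past)
        prev = sample
    return y


# Same final input sample (0.5), different histories.
quiet_history = pa_with_memory([0.0, 0.0, 0.5])
loud_history = pa_with_memory([0.0, 0.9, 0.5])
print(quiet_history[-1], loud_history[-1])
```

The last input sample is identical in both runs, yet the outputs differ because the model "remembers" the preceding sample. A predistorter therefore has to account for signal history, not just the instantaneous level.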

Digital predistortion (DPD) resolves these issues.

How DPD Works

DPD works by intentionally distorting the signal in the opposite direction to the distortion the PA will introduce. Similar to how a tailor leaves extra room when sewing a garment, knowing it will shrink after washing, DPD pre-adjusts the signal so that when it passes through the PA, the distortions cancel each other out and the transmission stays clean and accurate.
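
The cancellation can be illustrated with a minimal sketch, assuming a toy memoryless PA with cubic gain compression. The coefficient `A3`, the function names, and the fixed-point inversion are all illustrative choices, not the method used in any specific product.

```python
import cmath

A3 = 0.2  # hypothetical compression coefficient of the toy PA


def pa(u: complex) -> complex:
    """Toy memoryless PA with unit small-signal gain and cubic compression."""
    return u * (1.0 - A3 * abs(u) ** 2)


def predistort(x: complex, iterations: int = 40) -> complex:
    """Find u such that pa(u) is approximately x, by fixed-point iteration.

    Rearranging u * (1 - A3*|u|^2) = x gives u = x / (1 - A3*|u|^2).
    This converges for the modest drive level used below (|x| = 0.7).
    """
    u = x
    for _ in range(iterations):
        u = x / (1.0 - A3 * abs(u) ** 2)
    return u


x = cmath.rect(0.7, 0.3)  # desired output: amplitude 0.7, phase 0.3 rad
error_without = abs(pa(x) - x)               # PA compresses the raw signal
error_with = abs(pa(predistort(x)) - x)      # pre-expanded signal lands on target
print(f"error without DPD: {error_without:.4f}")
print(f"error with DPD:    {error_with:.2e}")
```

The predistorter slightly expands the amplitude so that the PA's compression brings it back to the intended value. Real PAs also exhibit phase distortion and memory, which this sketch ignores.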

The next evolution is clear: smarter DPD, enabled by AI.

TECH TALK

Current DPD solutions rely on a mathematical approach, Volterra-based linearization, that models PA behavior. Such models become exponentially more complex in 5G transmissions, requiring higher computation and memory resources. These requirements necessitate significant increases in chip area and energy consumption, reducing DPD's efficiency gains and value.
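
One way to see how quickly such models grow is to count coefficients. The sketch below counts the distinct entries of a generic real-valued Volterra series with symmetric kernels and compares it with a pruned memory polynomial. Both counting functions are illustrative assumptions; practical baseband DPD models are pruned in various ways, so actual counts will differ.

```python
from math import comb


def volterra_terms(order: int, memory: int) -> int:
    """Coefficients in a real-valued Volterra series with symmetric kernels.

    The p-th order kernel over (memory + 1) taps has comb(memory + p, p)
    distinct entries once permutations of the same taps are merged.
    """
    return sum(comb(memory + p, p) for p in range(1, order + 1))


def memory_polynomial_terms(order: int, memory: int) -> int:
    """Coefficients in a memory polynomial: one per (order, tap) pair."""
    return order * (memory + 1)


# Illustrative (order, memory depth) pairs, not taken from the article.
for order, memory in ((3, 2), (5, 5), (7, 10)):
    full = volterra_terms(order, memory)
    pruned = memory_polynomial_terms(order, memory)
    print(f"order={order} memory={memory}: full={full} pruned={pruned}")
```

Wider bandwidths generally call for deeper memory and higher nonlinear order, and the full coefficient count climbs far faster than either parameter alone.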

A New Paradigm: Machine Learning-Based DPD

Emerging techniques now leverage machine learning (ML) to replace the rigid mathematical models of traditional DPD. Instead of hand-tuning signal correction algorithms, engineers can use neural networks trained on real waveform data to model and correct nonlinear PA behavior dynamically. These models can be optimized through backpropagation and mapped directly into existing hardware structures.
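
The training idea can be sketched with a deliberately tiny stand-in: a predistorter with a single learnable weight `c`, fitted by gradient descent through a toy PA model. This is not a neural network and not the approach described in the article; it only shows the chain-rule mechanics that backpropagation applies at scale. The PA model, sample amplitudes, learning rate, and step count are all assumptions.

```python
A3 = 0.2  # hypothetical compression coefficient of the toy PA


def pa(u: float) -> float:
    """Toy memoryless PA acting on real amplitudes."""
    return u * (1.0 - A3 * u ** 2)


def loss_and_grad(c, samples):
    """Mean squared error of pa(predistort(r)) against r, and its derivative in c."""
    loss = 0.0
    grad = 0.0
    for r in samples:
        u = r * (1.0 + c * r ** 2)        # predistorter with one weight c
        err = pa(u) - r                    # what the PA output gets wrong
        dpa_du = 1.0 - 3.0 * A3 * u ** 2   # chain rule through the PA model
        du_dc = r ** 3                     # chain rule through the predistorter
        loss += err ** 2
        grad += 2.0 * err * dpa_du * du_dc
    n = len(samples)
    return loss / n, grad / n


def train(samples, learning_rate=20.0, steps=200):
    """Plain gradient descent on the single weight, starting from no correction."""
    c = 0.0
    initial_loss, _ = loss_and_grad(c, samples)
    for _ in range(steps):
        _, grad = loss_and_grad(c, samples)
        c -= learning_rate * grad
    final_loss, _ = loss_and_grad(c, samples)
    return c, initial_loss, final_loss


samples = [0.7 * i / 50 for i in range(1, 51)]  # assumed training amplitudes
c, initial_loss, final_loss = train(samples)
print(f"learned c={c:.3f}, loss {initial_loss:.2e} -> {final_loss:.2e}")
```

A neural network replaces the single weight with many and the toy PA with measured waveform data, but the loop of forward pass, error, gradient, and update has the same shape.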

Enter ML-DPD.

The benefits of ML-DPD are transformative. Optimization time can be reduced from days to hours. Model performance improves across a range of signal conditions. Energy savings become meaningful, not theoretical. Most importantly, these solutions can adapt—learning from real-time conditions and evolving as hardware and environments change.

Medium-Term DPD

While current DPD techniques are effective, evolving cellular demands are pushing the limits. To handle more data at faster speeds, power amplifiers must operate across wider bandwidths, creating rapid signal changes. These fast shifts can cause electrical instability and distortions that risk violating FCC emission limits.

To address this, researchers developed a new neural network-based model that better understands and reacts to these signal dynamics.

TECH TALK

Combining this neural network model with the Volterra-based DPD model, which runs at the system sample rate, produced Medium-Term DPD (MT-DPD). MT-DPD targets distortion caused by signal memory effects lasting from 100 nanoseconds to 10 microseconds. Early tests showed a significant reduction in distortion.7 Looking ahead, the focus may shift to tackling charge trapping, another performance hurdle for GaN.
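
To get a feel for these time scales, the sketch below converts them into sample counts. The 491.52 MSPS sample rate is an assumption chosen for illustration and is not stated in the article; the function name is hypothetical.

```python
SAMPLE_RATE_HZ = 491.52e6  # assumed DPD sample rate, not from the article


def duration_in_samples(duration_s: float, sample_rate_hz: float) -> float:
    """Number of sample periods spanned by a time interval."""
    return duration_s * sample_rate_hz


# The two ends of the medium-term window named in the text.
for label, duration_s in (("100 ns", 100e-9), ("10 us", 10e-6)):
    n = duration_in_samples(duration_s, SAMPLE_RATE_HZ)
    print(f"{label} spans about {n:.0f} samples")
```

At this assumed rate, the medium-term window stretches from roughly 50 samples to roughly 5,000 samples. One plausible reading is that covering such spans directly with per-sample Volterra taps would be costly, which is consistent with pairing the sample-rate model with a separate one.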

Solving for the Long Term

Modern PA challenges go beyond static distortion. Dynamic traffic in 5G exacerbates the long-term memory effects, especially due to charge trapping in high efficiency GaN-based PAs. AI-driven approaches can model these behaviors as well, extending the power of DPD to new regimes.

TECH TALK

Layered neural networks can now simultaneously correct distortion from immediate nonlinearities, fast-changing transients, and slow-drift effects, adapting as conditions change. The result: a unified system capable of delivering high signal fidelity while maintaining class-leading PA efficiency in real-world scenarios.7

ML-Based DPD: An Energy Efficient Future for Mobile Operators

ML-DPD addresses a real industry need: higher base station efficiency. This is vital to mobile carriers that have invested heavily in 5G infrastructure and must reduce costs to improve competitiveness. The ability to broadcast signals at optimal capacity while staying in compliance is an advantage for operators, and it lets users enjoy a speedy mobile internet experience.

Signal optimization is no longer just an engineering concern—it’s a business imperative. ML-DPD offers a path forward in containing 5G energy consumption.

References

1 Mobility Report. Ericsson, November 2024.

2 What Is 5G Energy Consumption? VIAVI.

3 The Growing Imperative of Energy Optimization for Telco Networks. McKinsey, 2024.

4 5G Energy Consumption: A Rapidly Changing Business Climate. STL Partners, 2021.

5 5G Era Mobile Network Cost Evolution. GSMA, 2019.

6 Energy Consumption Breakdown. GSMA, 2021.

7 ADI independent tests.