Abstract

Infotainment gesture control enhances driver experience and safety by helping avoid distractions caused by fiddling with touchscreens and poorly located dials. Gesture recognition can complement touchscreens, voice, and knobs so that the driver can focus on the road. Time-of-flight cameras, although capable, are computationally heavy and expensive. A new, disruptive approach integrates optics, sensing, and an analog front-end in a single ASIC, dramatically reducing cost and advancing the adoption of this important technology in a wider tier of automobiles.

Introduction

Automotive infotainment gesture control, currently only available in luxury cars, increases driver safety and comfort. With gesture control, drivers do not need to risk an accident by taking their eyes off the road while operating touchscreens or dials. With a flick of the wrist, drivers can adjust volume and air flow, wave away calls on connected phones, change a music playlist, and more. Gesture control can minimize the use of touchscreens, making them less distracting, and can complement voice.

For example, drivers can ask a virtual assistant to play a certain song and then, with a rotation of a hand, turn up the volume while their eyes stay on the road the entire time. In contrast to gesture, voice recognition requires an LTE connection back to a cloud processor, backed by a large database, to understand all dialects and languages. Voice recognition also does not work well when windows are down, a sunroof is open, or music is cranked up in the car. In addition to advancing traffic safety, gesture recognition helps people who are deaf or unable to speak and therefore cannot use voice recognition technologies. Touch panels are very distracting, requiring drivers to memorize button locations and rely on tactile feel to navigate around the display. Finally, while touch-based commands inevitably cause some wear of touched surfaces, gesture recognition does not wear down the controlled devices.

Currently, only luxury or Tier 1 automobiles have adopted gesture control technologies, due to their high complexity and cost. But the benefits of this technology warrant every effort to broaden adoption across mid- and low-end automobiles. In this design solution, we discuss a typical application and introduce a new, disruptive approach that is highly integrated and cost-effective, enabling the adoption of gesture recognition (Figure 1) in a far larger number of automobiles.

Figure 1. Gesture control in cars.

Typical System

Figure 2 illustrates a typical technology that relies on a time-of-flight (ToF) camera to identify and scan a 3D scene. The ToF technique consists of sending an infrared beam to the target to be analyzed and timing its reflection. The reflected signal is processed by an analog front-end (AFE), and the raw data is passed to the application processor (AP) for gesture recognition.

Figure 2. Typical ToF camera-based gesture control system.

This system has a high pixel count (60,000) and can perform eye/facial, body, and finger tracking, recognize complex gestures, and provide context awareness. It produces a huge amount of data, requiring a complex MCU for processing. The use of a camera and a complex MCU makes this system versatile but expensive. ToF may offer a lot of gesture possibilities, but that complexity is too much for automotive applications: customers would need a manual to program the gestures, and most of them are not needed for everyday usage.

A Breakthrough Technology

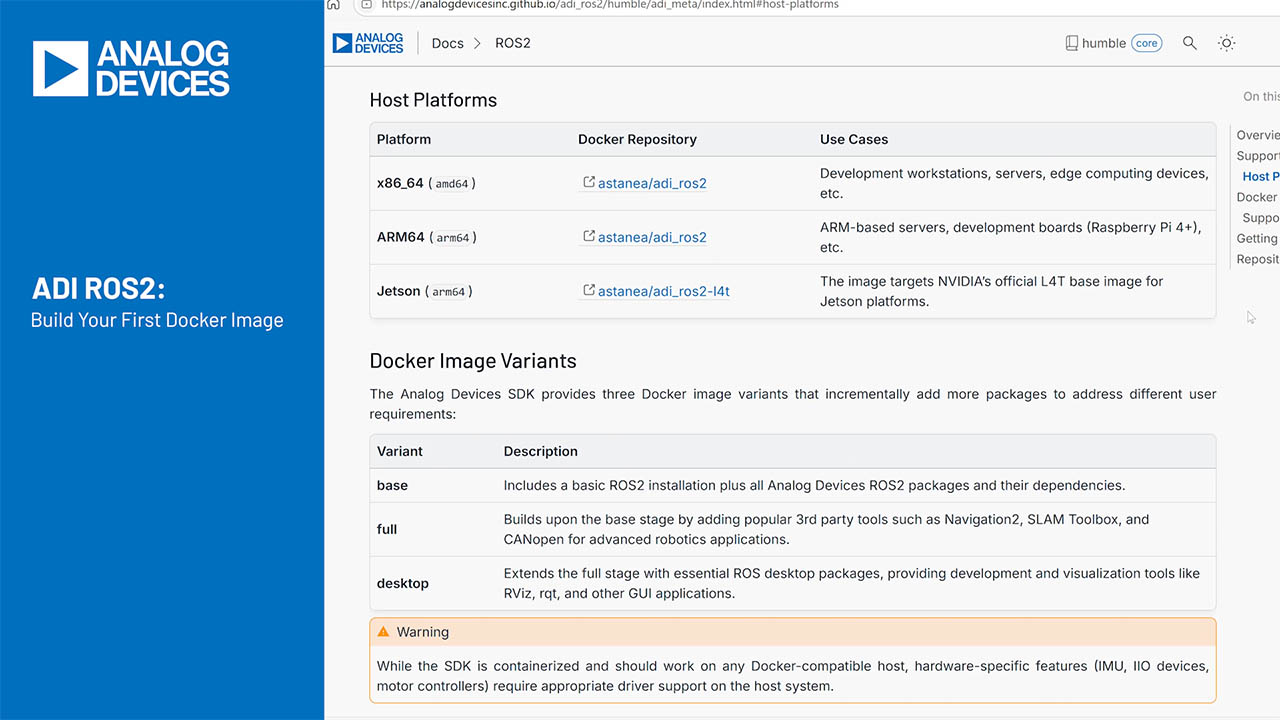

The benefits of infotainment gesture control warrant every effort to reduce complexity and cost by offering simple but important gesture control for broader use. A novel photodiode array ASIC integrates optics, a photodetector array, and an analog front-end (AFE) as shown in Figure 3.

Figure 3. Integrated gesture sensor system.

The integrated gesture sensor connects to a simple MCU via SPI or I2C bus for the recognition process. This integration is enabled by a proprietary optical QFN package (4mm x 4mm). Figure 4 shows the entire ensemble, including the sensor photodiodes on board the ASIC along with optical filtering.

Figure 4. Integrated optics and AFE cross-section.

The sensor array has to be protected from solar irradiance. At 940nm there is a dip in solar irradiance caused by H2O absorption in the atmosphere, and this is where the sensor operates. An optical long-pass filter blocks solar irradiance at wavelengths below 875nm (Figure 5).

Figure 5. Protecting the sensor from solar irradiance.

Four 940nm IR LEDs illuminate the target. The reflected light is sensed by a 60-pixel sensor array on board the ASIC, which also incorporates all the necessary signal digitization and control (Figure 6).

Figure 6. Integrated gesture sensor ensemble.

Integrated Gesture Sensor Operation

The ‘aperture’ shown in Figure 4 includes a hole in a layer of black proprietary coating that limits the light intake. The quasi-pinhole camera approach, with a large aperture, creates a blob or blurred image, as opposed to a focused image. The 10 × 6 photodetector array captures the entire blob. As an example, the IR LED array emits a sequence of light pulses of 25µs duration, each followed by a 25µs pause. The photodetector array integrates the incoming light during each pulse and again during each pause. Subtracting the latter from the former removes the ambient light, which is common to both, and leaves an estimate of the blob intensity. The total conversion period is 20ms, which corresponds to 50 frames per second (FPS). Each frame is passed to the MCU, which calculates the motion vector. The algorithm then processes the vector data and outputs the resulting gesture event.
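The ambient-light subtraction and motion-vector steps described above can be modeled in a few lines of Python. This is only an illustrative sketch: the sensor performs the subtraction in hardware, and the source does not say how the MCU computes the motion vector. The intensity-weighted centroid used here is an assumption, as are all function names.

```python
ROWS, COLS = 6, 10  # 10 x 6 photodetector array, as described in the text


def ambient_rejected_frame(lit, dark):
    """Subtract the LED-off sample from the LED-on sample, pixel by pixel.

    Ambient light appears in both samples and cancels; negative results
    (noise) are clamped to zero.
    """
    return [[max(l - d, 0) for l, d in zip(lit_row, dark_row)]
            for lit_row, dark_row in zip(lit, dark)]


def blob_centroid(frame):
    """Return the intensity-weighted centre (x, y), or None for an empty frame."""
    total = sum(sum(row) for row in frame)
    if total == 0:
        return None
    x = sum(c * v for row in frame for c, v in enumerate(row)) / total
    y = sum(r * v for r, row in enumerate(frame) for v in row) / total
    return (x, y)


def motion_vector(prev_frame, next_frame):
    """Centroid displacement between two frames (assumed method, not from the source)."""
    a, b = blob_centroid(prev_frame), blob_centroid(next_frame)
    if a is None or b is None:
        return None
    return (b[0] - a[0], b[1] - a[1])


def synthetic_frame(blob_col, ambient=100, signal=50):
    """Hypothetical test input: uniform ambient light plus a one-column blob."""
    dark = [[ambient] * COLS for _ in range(ROWS)]
    lit = [[ambient + (signal if c == blob_col else 0) for c in range(COLS)]
           for _ in range(ROWS)]
    return ambient_rejected_frame(lit, dark)


if __name__ == "__main__":
    first, second = synthetic_frame(2), synthetic_frame(5)
    print(motion_vector(first, second))
```

In this synthetic case the uniform ambient level cancels completely, so the centroid depends only on the reflected LED light. Real frames carry noise, so a practical implementation would likely filter the vectors over several frames.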

With a low pixel count (60), this technique allows for proximity and finger tracking, rotation and other basic but important gestures.
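To put the figures quoted in this article side by side, the short calculation below derives the frame rate, the pixel throughput, and the pixel-count ratio against the 60,000-pixel ToF camera. The pulse-pair count is an upper bound that assumes the whole conversion period is spent pulsing, which the source does not state. The variable names are illustrative.

```python
PULSE_US = 25          # LED-on time per pulse (from the text)
PAUSE_US = 25          # LED-off time after each pulse (from the text)
CONVERSION_MS = 20     # total conversion period per frame (from the text)
SENSOR_PIXELS = 60     # integrated gesture sensor
TOF_PIXELS = 60_000    # ToF camera quoted earlier in the article

# One frame per conversion period.
frames_per_second = 1000 // CONVERSION_MS

# Upper bound only: assumes the entire period is used for pulse/pause pairs.
max_pulse_pairs = (CONVERSION_MS * 1000) // (PULSE_US + PAUSE_US)

# Pixel values the MCU has to handle each second.
sensor_pixels_per_second = SENSOR_PIXELS * frames_per_second

# How many times fewer pixels per frame than the ToF camera.
pixel_ratio = TOF_PIXELS // SENSOR_PIXELS

print(frames_per_second, max_pulse_pairs, sensor_pixels_per_second, pixel_ratio)
```

A per-frame payload that is three orders of magnitude smaller is what makes a simple MCU a plausible host for the recognition algorithm.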

Application Circuit

Figure 7 shows a simple application circuit for the MAX25205. This low-cost data-acquisition system for gesture and proximity sensing recognizes the following independent gestures:

- Hand-swipe gestures (left, right, up, and down)

- Finger and hand rotation (CW and CCW)

- Proximity detection

- Linger-to-click

- Air click

- Wave
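As an illustration of how the hand-swipe gestures in this list might be derived on the MCU, the sketch below sums per-frame motion vectors and picks the dominant direction. It is not the device's actual algorithm. The function name, the travel threshold, and the axis orientation (x grows to the right, y grows downward) are all assumptions.

```python
def classify_swipe(vectors, min_travel=3.0):
    """Classify a swipe from a list of per-frame (dx, dy) motion vectors.

    min_travel is a hypothetical threshold, in pixels, below which the
    movement is treated as noise and no gesture is reported.
    """
    dx = sum(v[0] for v in vectors)
    dy = sum(v[1] for v in vectors)
    if max(abs(dx), abs(dy)) < min_travel:
        return None  # hand barely moved
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


if __name__ == "__main__":
    print(classify_swipe([(1.0, 0.1)] * 4))
    print(classify_swipe([(0.0, -1.5), (0.0, -2.0)]))
    print(classify_swipe([(0.5, 0.5)]))
```

Rotation, linger-to-click, and air click would need additional state, such as tracking the angle or dwell time across frames, and are left out of this sketch.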

A low-power, low-cost CPU without floating-point support is all that is required to process the data from the sensor. This can be an Arm® Cortex-M0 or spare MIPS from another CPU. The BOM is minimal: a few filtering capacitors and a discrete MOSFET that drives each IR LED.

Figure 7. Integrated gesture sensor application diagram.

Conclusion

Gesture recognition improves driver safety and experience. It makes for a “cool” car experience, without the sting of a high price tag. So far, the high complexity and cost of ToF-based solutions have limited the adoption of this technology to higher-end automobiles. In this design solution, we briefly discussed the limitations of the ToF approach and introduced a novel, disruptive gesture recognition ASIC that integrates optics, sensing, and an AFE in a small, proprietary, optical side-wettable QFN package. The ASIC, in conjunction with a low-cost CPU, not only delivers gesture recognition with substantially less cost and complexity than ToF cameras but also helps broaden the adoption of this newer technology to a larger class of automobiles and other consumer products.