By Sergiu Cucuirean, Embedded Software Engineer

September 24, 2025

Smart AI Updates at the Edge

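As any embedded engineer knows, updating an AI model on an edge device is not easy. Typically, the neural network is flashed directly into the firmware in the hope that it will keep running reliably for years. Updating it usually means physical access to the device and a complete reprogramming.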

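Now imagine updating the model on hundreds, or even thousands, of deployed devices. The job is slow and labor-intensive. Thanks to a recent technical breakthrough at ADI, however, model updates no longer have to be a problem.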

Meet the Robot Swarm: OpenSwarm

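While ADI was taking part in the EU-funded OpenSwarm project (openswarm.eu), swarm-scale model updates were one of the main challenges the team faced. OpenSwarm aims to demonstrate and advance collaborative AI in swarms of small autonomous robots, which is a demanding task: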

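- The robots are small, so battery capacity and physical space are limited. Model updates therefore have to be highly efficient, keeping power consumption, network bandwidth, and data volume to a minimum.

- If a model fails to install after an update, the robot must be able to recover from the error.

- The robots are also highly mobile and may not be able to reach a central network.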

Why the MAX78000 Processor?

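The MAX78000 hardware is designed for exactly this kind of resource-constrained scenario: it combines a low-power Cortex®-M4 processor with a dedicated CNN accelerator that has 64 parallel processing units. In addition, the MAX78000 SDK can package and quantize PyTorch models and integrate them into firmware.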

Models as Data: Unlocking Model Updates

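Developers usually embed the quantized model as static C arrays. We took a different approach and built a firmware-based architecture that treats the neural network as a loadable data structure. The quantized model data is packaged in a structured firmware format that contains everything the CNN needs to run: layer configuration, quantized weights, bias values, and architecture metadata.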
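
To make the idea of a model as loadable data concrete, here is a minimal host-side sketch in Python of what such a container could look like. The layout is an assumption made for illustration, not the actual ADI firmware format: the `CNNM` magic value, the header fields, the `Layer` record, and the CRC-32 check are all hypothetical.

```python
import struct
import zlib
from dataclasses import dataclass

# Hypothetical container layout, for illustration only:
# magic, format version, layer count, payload length, CRC-32 of the payload.
FILE_HDR = struct.Struct("<4sHHII")
# Per-layer record: in channels, out channels, kernel size, pad byte,
# weight length, bias length.
LAYER_HDR = struct.Struct("<HHBxII")
MAGIC = b"CNNM"


@dataclass
class Layer:
    in_ch: int
    out_ch: int
    kernel: int
    weights: bytes  # quantized weights, stored as raw bytes
    bias: bytes     # quantized biases, stored as raw bytes


def pack_model(layers, version=1):
    """Serialize layer records behind a fixed-size header."""
    payload = b"".join(
        LAYER_HDR.pack(l.in_ch, l.out_ch, l.kernel, len(l.weights), len(l.bias))
        + l.weights + l.bias
        for l in layers
    )
    header = FILE_HDR.pack(MAGIC, version, len(layers), len(payload),
                           zlib.crc32(payload))
    return header + payload


def unpack_model(blob):
    """Check the header and checksum, then rebuild the layer records."""
    magic, _version, count, length, crc = FILE_HDR.unpack_from(blob, 0)
    payload = blob[FILE_HDR.size:]
    if magic != MAGIC or len(payload) != length or zlib.crc32(payload) != crc:
        raise ValueError("corrupt or unrecognized model container")
    layers, off = [], 0
    for _ in range(count):
        in_ch, out_ch, kernel, w_len, b_len = LAYER_HDR.unpack_from(payload, off)
        off += LAYER_HDR.size
        weights = payload[off:off + w_len]
        off += w_len
        bias = payload[off:off + b_len]
        off += b_len
        layers.append(Layer(in_ch, out_ch, kernel, weights, bias))
    return layers
```

Because the file describes itself (layer count, sizes, checksum), a loader can walk it without being recompiled for each network, which is the property the rest of this article relies on.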

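At the heart of the system is a CNN engine driver. It hides the complexity of the MAX78000's CNN accelerator and takes care of memory management, operator scheduling, hardware synchronization, and similar low-level tasks.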

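What does this achieve? A single codebase can run multiple models. The toolset shortens development time and, more importantly, makes model updates straightforward: a model becomes data that can be loaded, swapped, and updated independently of the core application logic. Even when models differ widely, the same CNN engine driver runs them without modification.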

Networking the Swarm: How OTA Updates Reach Every Robot

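The team uses ADI's SmartMesh wireless mesh networking technology to deliver over-the-air (OTA) model updates. SmartMesh does not depend on Wi-Fi or 5G infrastructure and provides reliable, low-power communication for large, dynamic networks. In our robot swarm, every node acts as both a sensor and a router. Even in cluttered or obstructed environments, an update package can hop from node to node across the mesh. With SmartMesh, devices that are out of direct range of the central gateway can still receive the latest AI model securely and efficiently.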
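
A model file is far larger than a single low-power radio packet, so any mesh transfer has to split the update into small pieces and put them back together on the robot. The sketch below shows one generic way to do that in Python. It does not use the SmartMesh API; the 64-byte `MAX_PAYLOAD` budget and the four-byte chunk header are placeholder assumptions, not SmartMesh figures.

```python
import struct
from typing import Iterable, Optional

CHUNK_HDR = struct.Struct("<HH")  # sequence number, total number of chunks
MAX_PAYLOAD = 64                  # assumed per-packet budget in bytes


def split_update(blob: bytes) -> list:
    """Cut an update into packets that each fit within MAX_PAYLOAD."""
    size = MAX_PAYLOAD - CHUNK_HDR.size
    total = (len(blob) + size - 1) // size
    return [
        CHUNK_HDR.pack(seq, total) + blob[seq * size:(seq + 1) * size]
        for seq in range(total)
    ]


def reassemble(packets: Iterable[bytes]) -> Optional[bytes]:
    """Rebuild the update; duplicates and reordering are tolerated.

    Returns None while at least one chunk is still missing.
    """
    parts = {}
    total = None
    for pkt in packets:
        seq, total = CHUNK_HDR.unpack_from(pkt, 0)
        parts[seq] = pkt[CHUNK_HDR.size:]
    if total is None or len(parts) != total:
        return None
    return b"".join(parts[i] for i in range(total))
```

Carrying the sequence number and the total count in every packet lets a node that joins late, or that hears packets out of order through different hops, work out which chunks it still needs.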

How OTA Model Deployment Works

Because the model is now treated as data, an OTA update can follow the steps of a simple firmware update:

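- The firmware is transmitted over the SmartMesh wireless network.

- When a robot receives the firmware, it checks its compatibility, its size, and the network layers it requires.

- If validation succeeds, the robot installs the model in a single step.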

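If something goes wrong during the update, the system falls back to the previous model, which keeps the system running safely and reduces the risk of downtime. The device stores multiple models on an SD card using FatFS (a File Allocation Table file system), which makes it easy to test, switch, or roll back versions.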
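
The validate, install, and roll back flow described above can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not the robots' firmware: the file names `active.bin`, `staged.bin`, and `previous.bin`, the size budget, the supported layer set, and the metadata dictionary are all hypothetical, and the CRC-32 check is an extra integrity step that the list above does not mention.

```python
import os
import shutil
import zlib
from pathlib import Path

MAX_MODEL_BYTES = 256 * 1024                        # hypothetical size budget
SUPPORTED_LAYERS = {"conv2d", "maxpool", "linear"}  # hypothetical layer set
FORMAT_VERSION = 1                                  # hypothetical version


def validate(meta: dict, blob: bytes) -> bool:
    """Check compatibility, size, supported layers, and integrity."""
    return (
        meta.get("format") == FORMAT_VERSION
        and len(blob) <= MAX_MODEL_BYTES
        and set(meta.get("layers", ())) <= SUPPORTED_LAYERS
        and meta.get("crc32") == zlib.crc32(blob)
    )


def install(store: Path, meta: dict, blob: bytes) -> bool:
    """Stage the new model, keep a copy of the old one, then switch."""
    if not validate(meta, blob):
        return False                       # the active model is left untouched
    active = store / "active.bin"
    staged = store / "staged.bin"
    staged.write_bytes(blob)
    if active.exists():
        shutil.copyfile(active, store / "previous.bin")
    os.replace(staged, active)             # one rename switches models
    return True


def rollback(store: Path) -> bool:
    """Restore the previously installed model, if one was kept."""
    previous = store / "previous.bin"
    if not previous.exists():
        return False
    os.replace(previous, store / "active.bin")
    return True
```

Writing the new model to a staging file first means a transfer that is interrupted halfway never overwrites the model that is currently running. On a real FAT volume the rename is not guaranteed to be atomic across power loss, so an embedded version would likely need an additional marker or boot-time check.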

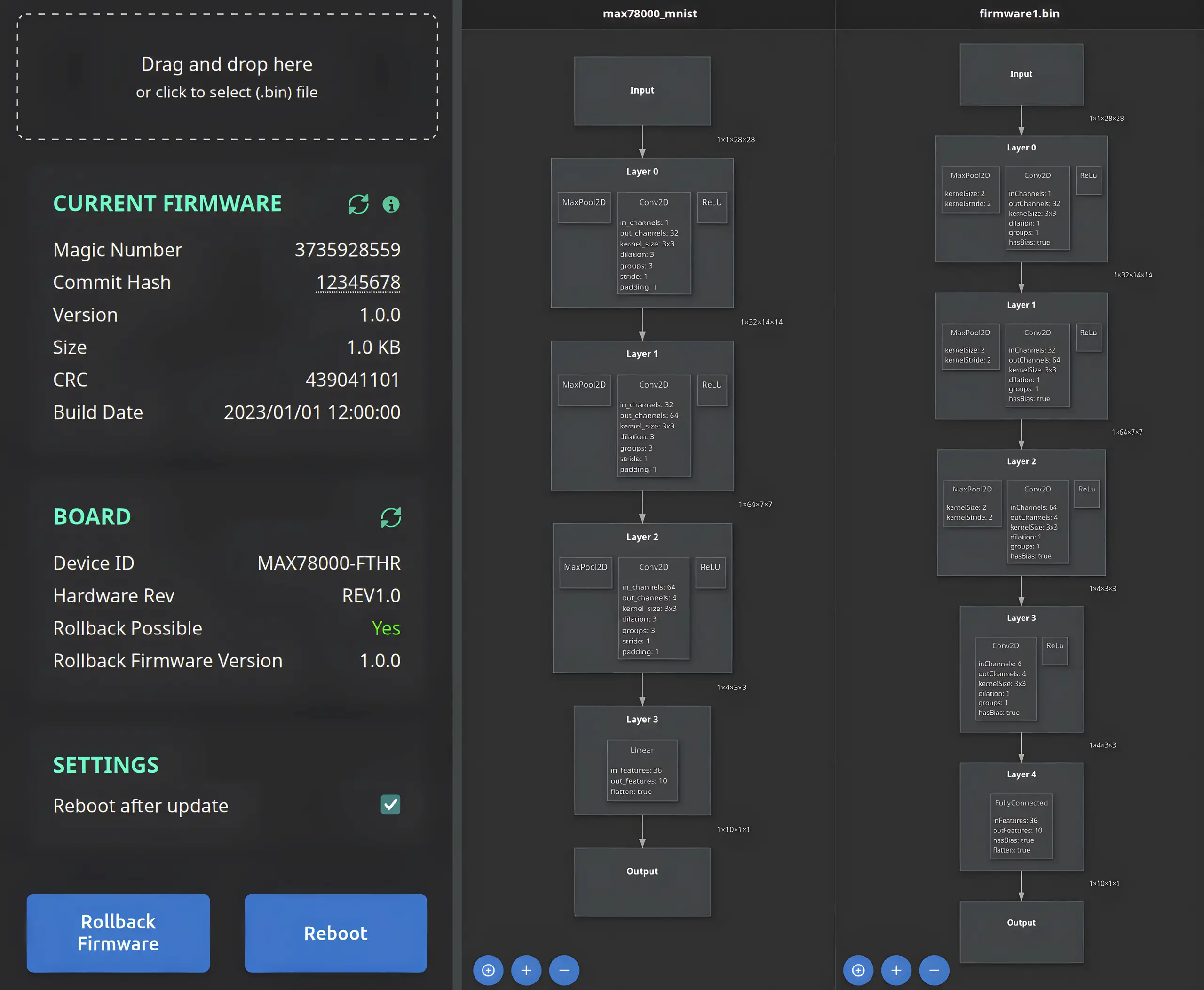

Simplifying Deployment: Visual Model Management

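A large robot swarm makes model management and distribution a challenge. To address this, we built a simple web-based management tool. Users drag and drop the model firmware into the browser. The tool then validates the file, loads the model for distribution, and shows in real time how far the update has progressed across the swarm.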

The tool also lets users:

- Inspect the structure of the CNN

- Compare old and new models side by side

- Monitor memory usage and parameter information
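
As a rough picture of what a side-by-side comparison and a memory estimate involve, the following Python sketch diffs two per-layer summaries. It is not the management tool's code; the `LayerInfo` record, its fields, and the report format are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerInfo:
    name: str
    kind: str
    params: int    # number of weights plus biases
    bits: int = 8  # quantization width per parameter


def weight_bytes(layers) -> int:
    """Estimate the storage needed for all parameters, rounded up per layer."""
    return sum((l.params * l.bits + 7) // 8 for l in layers)


def compare(old, new) -> list:
    """List layers that were added (+), removed (-), or changed (~)."""
    old_by = {l.name: l for l in old}
    new_by = {l.name: l for l in new}
    # Keep the old model's layer order, then append layers only in the new one.
    names = list(dict.fromkeys([l.name for l in old] + [l.name for l in new]))
    report = []
    for name in names:
        a, b = old_by.get(name), new_by.get(name)
        if a is None:
            report.append(f"+ {name}: {b.kind}, {b.params} params")
        elif b is None:
            report.append(f"- {name}: {a.kind}, {a.params} params")
        elif a != b:
            report.append(f"~ {name}: {a.params} -> {b.params} params")
    return report
```

A summary like this is small enough to compute in the browser before anything is sent to the swarm, so an oversized or unexpected model can be caught before it costs any radio time.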

The tool lowers the technical barrier: engineers and data scientists can work together on model updates without needing embedded systems expertise.

Looking Ahead

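OTA model deployment changes how we think about AI at the edge: it separates intelligence from firmware, so models can evolve independently without reprogramming the whole device. Companies can use this approach to offer continuous upgrades for their edge devices. Although we validated it with the MAX78000 processor and SmartMesh, the approach is broadly applicable and could help shape the next generation of AI-driven systems.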

Learn More

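Looking to modernize your edge AI workflow? OTA CNN deployment brings flexibility and control to embedded intelligence. Contact our team, or learn more about the ADI MAX78000 processor and SmartMesh networking technology.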

This project has received funding from the European Union's Horizon Europe Framework Programme under grant agreement No. 101093046.